Improving robot learning through visual forecasting

Farming is an essential part of Minnesota’s economy, and the University of Minnesota has a long tradition of providing farmers with state-of-the-art technologies to increase productivity.

However, one area that has lagged is the use of fully autonomous robots that can carry out their tasks alongside humans. The absence of such robots from our lives can be attributed to several factors: perception algorithms struggle to operate robustly in uncontrolled and “busy” environments; safe operation around other machinery, animals, and humans requires robust sensing, control, and motion planning; and learning is inefficient, which makes it hard for robots to acquire behaviors solely through interaction with the environment.

CS&E Ph.D. student Nicolai Haeni, who was a 2019-20 UMII MnDRIVE PhD Graduate Assistant, studied two problems related to this topic: novel view synthesis (NVS) and robotic grasping of soft objects.

- NVS is the ability to generate unseen or occluded parts of an object from a single camera view. In the future, this could allow a robot to predict a dangerous situation before it happens. This project presented continuous object representation networks (CORN), a conditional architecture that encodes an input image’s geometry and appearance and maps them to a 3D-consistent scene representation. Using this 3D representation, the model can render desired novel views from a single input image.

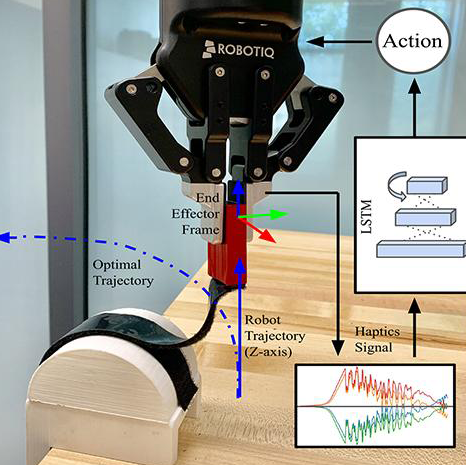

- Object manipulation is a critical skill that robots need in order to interact with their environment. While rigid object manipulation has been studied extensively, non-rigid objects present a greater challenge. This project presented the task of peeling Velcro as a possible application of robotic manipulation of non-rigid objects. The developed model used only tactile sensor inputs for effective Velcro peeling.

Haeni, who works with Professor Volkan Isler in the Robotic Sensor Networks Lab, used the Minnesota Supercomputing Institute extensively for this research. He participated in the MSI Research Exhibition in April 2021, presenting a poster related to this project titled “Continuous Object Representation Networks: Novel View Synthesis Without Target View Supervision” (authors: Nicolai Haeni, Selim Engin, Jun-Jee Chao, Volkan Isler).

He has also co-authored two papers on the project:

- J Yuan, N Haeni, V Isler. Multi-Step Recurrent Q-Learning for Robotic Velcro Peeling. IEEE International Conference on Robotics and Automation (ICRA), 2021.

- N Haeni, S Engin, J-J Chao, V Isler. Continuous Object Representation Networks: Novel View Synthesis Without Target View Supervision. Advances in Neural Information Processing Systems (NeurIPS), 33, 2020.