New Storage Technologies Research Projects

Shingled Magnetic Recording

Shingled Magnetic Recording (SMR): Layout Designs, Performance Evaluations and Applications

Shingled Magnetic Recording (SMR) technology builds largely on current hard disk drive (HDD) technology, writing sequentially to partially overlapped tracks to push past the areal density limits of conventional HDDs. SMR drives can benefit large-scale storage systems by reducing the Total Cost of Ownership (TCO) and helping to meet the challenge of explosive data growth.

To embrace the challenges and opportunities brought by SMR technology, our group is conducting SMR-related research (Figure 1) along the following dimensions:

SMR Drive Internal Data Layout Designs. To extend the applicability of shingled write disks (SWDs) beyond archival and backup systems, we have designed both static and dynamic track-level mapping schemes that reduce write amplification and garbage collection overhead. Furthermore, we have implemented a simulator that enables us to evaluate various SMR drive data layout designs under different workloads.
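To illustrate why in-place updates cause write amplification on shingled tracks, the following is a minimal sketch under a deliberately simplified band model: writing a track is assumed to damage the next track in the same band, so an update forces every later track in the band to be rewritten. The function names and the band model are illustrative assumptions, not the mapping schemes or the simulator described above.

```python
from typing import Iterable


def tracks_written_for_update(band_size: int, track: int) -> int:
    """Physical tracks written when one track of a band is updated in place.

    Simplified model (an assumption): writing track i overlaps track i + 1,
    so tracks i .. band_size - 1 must all be rewritten.
    """
    if not 0 <= track < band_size:
        raise ValueError("track index outside the band")
    return band_size - track


def write_amplification(band_size: int, updates: Iterable[int]) -> float:
    """Average physical tracks written per logical track update."""
    updates = list(updates)
    if not updates:
        raise ValueError("need at least one update")
    physical = sum(tracks_written_for_update(band_size, t) for t in updates)
    return physical / len(updates)


if __name__ == "__main__":
    # Updating the first track of an 8-track band rewrites the whole band.
    print(write_amplification(8, [0]))   # 8.0
    # Updating the last track damages nothing else.
    print(write_amplification(8, [7]))   # 1.0
```

Under this model, a layout that steers frequently updated data toward the end of a band, or remaps updates elsewhere, lowers the average number of tracks rewritten per update.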

SMR Drive Performance Evaluations. Building SMR drive based storage systems calls for a deep understanding of the drives' performance characteristics. To this end, we have carried out in-depth performance evaluations of host-aware SMR (HA-SMR) drives, with special emphasis on the performance implications of the SMR-specific APIs and on how these drives can be deployed in large storage systems.

SMR Drive Based Applications. Building on our experience with SMR layout design and performance evaluation, we are investigating potential SMR drive based applications such as SMR RAID systems, SMR-based key-value stores, SMR-backed deduplication systems, and SMR-friendly file systems.

SSD as SPU

Solid-State Drive as a Storage Processing Unit

Previous research has shown that performing computation close to the data improves system performance in terms of response time and energy consumption, especially for IO-intensive applications. As the role of flash memory in storage architectures grows, solid-state drives (SSDs) have gradually displaced hard disk drives, offering much shorter access latency and lower power consumption. Building on this development, some researchers have proposed an active flash architecture that runs IO-intensive applications inside the storage device on the SSD's embedded controller. However, that embedded controller must also implement the flash translation layer, which makes the SSD appear to the host as an HDD, and must communicate with the host interface to transfer the requested data. The spare computation capacity available for running other applications is therefore quite limited. To maximize the computation capacity of the SSD, we propose a multi-processor design called the storage processing unit (SPU).

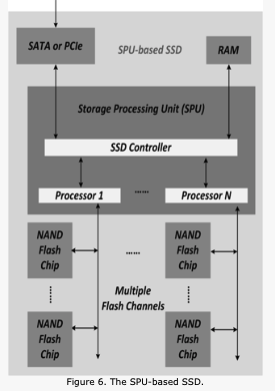

Figure 6 shows an SSD block diagram based on the proposed SPU. In addition to the SSD controller, the SPU integrates a processor into each flash channel, where N is the total number of flash channels. Because each flash channel and its processor are independent of the others, computation can be performed in parallel within the SPU, which improves both computation throughput and response time.

To evaluate the proposed SPU, we run MySQL with the TPC-H benchmark on two different systems: a conventional SSD-based system (the baseline) and the SPU-based system. In the baseline system, the database application runs on the host CPU. Because a conventional database system uses a row-oriented data layout and must transfer entire database tables to the host for processing, transferring unneeded tuples consumes IO bandwidth and degrades overall performance. In the SPU-based system, we offload part of the computation to the SPU using the Flash Scan/Join algorithm and return only the processed results to the host. Because the SPU transfers only the required data to the host and performs computation in parallel, both IO time and computation time improve significantly.
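The idea of filtering inside the drive can be sketched as follows. This is only an illustration of per-channel scan offloading, not the Flash Scan/Join algorithm itself: rows are assumed to be striped round-robin across channels, each channel's processor is simulated by a thread, and all names (`stripe`, `channel_scan`, `spu_scan`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def stripe(rows, n_channels):
    """Distribute rows round-robin across flash channels (an assumption)."""
    return [rows[i::n_channels] for i in range(n_channels)]


def channel_scan(rows, predicate, columns):
    """Work done by one channel processor: filter rows, keep needed columns."""
    return [tuple(row[c] for c in columns) for row in rows if predicate(row)]


def spu_scan(rows, n_channels, predicate, columns):
    """Scan all channels in parallel and return only the matching tuples."""
    partitions = stripe(rows, n_channels)
    with ThreadPoolExecutor(max_workers=n_channels) as pool:
        partials = list(
            pool.map(lambda part: channel_scan(part, predicate, columns), partitions)
        )
    # Only these reduced results would cross the host interface.
    return [item for part in partials for item in part]


if __name__ == "__main__":
    table = [{"id": i, "price": i * 10, "comment": "x" * 100} for i in range(8)]
    result = spu_scan(table, 4, lambda r: r["price"] >= 60, ("id", "price"))
    print(len(result), "of", len(table), "rows reach the host")
```

In the baseline arrangement, all eight rows, including the wide `comment` column, would be sent to the host before filtering; here only the two matching, projected tuples are returned.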

Compared with conventional systems, the SPU-based system reduces both computation time and energy consumption. The reduced energy allows for greater scalability in a cloud-like environment: the high power cost of a CPU is amortized over a large population of inexpensive processors in the SPU, which allows for significantly more compute resources within the footprint of a given system power budget. In summary, the proposed SPU significantly benefits IO-intensive applications in both response time and energy consumption.

SSD Data/Metadata Reliability

Exploring Reliability and Consistency of SSD Data and Metadata

Flash memory is an emerging storage technology that shows tremendous promise in compensating for the limitations of current storage devices. The wakeup / recovery time of SSDs, such as those used in ultrabooks, is a critical parameter. The many alternatives available for address mapping and journaling schemes affect this parameter in different ways. This project aims to investigate and evaluate the tradeoffs that address mapping and journaling schemes impose on wakeup / recovery time while maintaining the reliability and consistency of the data and metadata stored in flash memory.

SSD Wakeup / Recovery time: the time required for the SSD controller to bring data and metadata into a consistent state upon startup / wakeup.

This process involves the following steps:

- Updating the on-disk copy of the address mapping table for data pages whose address mappings had not yet reached it.

- Reconstructing the upper levels of the address mapping table (the SRAM copy) from the leaf level of the on-disk copy.

- Reconstructing other metadata (free-space information, etc.) from its on-disk copy.
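The three steps above can be sketched as a small in-memory model. This is a simplified illustration under stated assumptions, not the controller logic studied in the project: the mapping table is modeled as a dictionary, un-persisted mappings are assumed to be available as a list of `(logical page, physical page)` pairs, and the adjustment of the free-page set in step 3 is an added assumption. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RecoveredState:
    leaf_table: dict   # logical page -> physical page (leaf level)
    directory: dict    # leaf-page index -> logical pages it covers (upper level)
    free_pages: set    # physical pages believed to be free


def recover(leaf_on_disk, pending_updates, free_on_disk, entries_per_leaf_page):
    # Step 1: apply mappings that never reached the on-disk table.
    leaf = dict(leaf_on_disk)
    for lpn, ppn in pending_updates:
        leaf[lpn] = ppn

    # Step 2: rebuild the upper level (kept in SRAM) from the leaf level.
    directory = {}
    for lpn in sorted(leaf):
        directory.setdefault(lpn // entries_per_leaf_page, []).append(lpn)

    # Step 3: reload free-space metadata from its on-disk copy.
    # Assumption: pages consumed by the pending updates are no longer free.
    free = set(free_on_disk) - {ppn for _, ppn in pending_updates}

    return RecoveredState(leaf, directory, free)


if __name__ == "__main__":
    state = recover(
        leaf_on_disk={0: 10, 1: 11, 5: 12},
        pending_updates=[(1, 13), (6, 14)],
        free_on_disk={13, 14, 15},
        entries_per_leaf_page=4,
    )
    print(state)
```

Each step touches a different amount of flash, which is why the choice of mapping and journaling scheme changes how long recovery takes.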

The multi-channel, multi-chip architecture of an SSD offers parallelism at the channel, chip, die, and plane levels. Coupled with the use of advanced commands, this parallelism can greatly reduce wakeup / recovery time. The project also investigates the impact of this approach on wear leveling, garbage collection, and read / write performance.

Kinetic Drives

Research on Kinetic Drives

As cloud storage becomes more important, many data-intensive applications are gaining a foothold in both research and industry. Unstructured data is growing rapidly: the International Data Corporation predicted that 80% of the 133 exabytes of global data growth in 2017 would come from unstructured data.

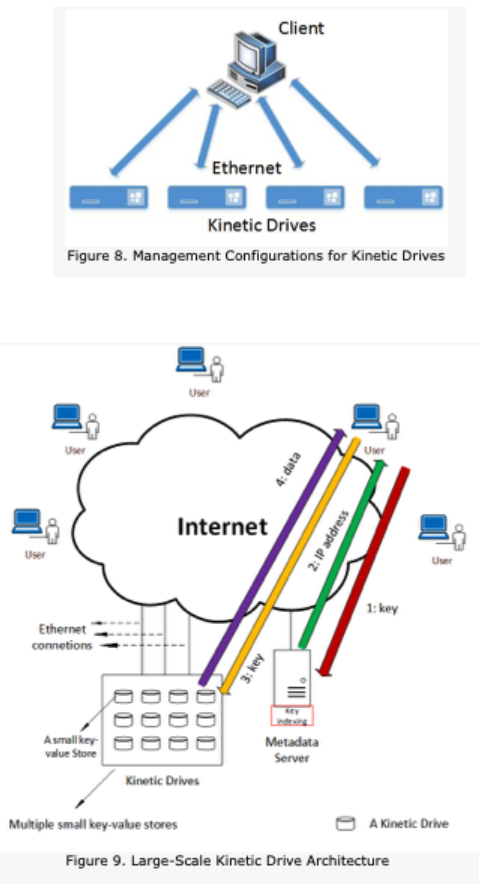

To manage these huge data storage requirements, Seagate recently launched Kinetic direct-access-over-Ethernet hard drives. These drives incorporate a LevelDB key-value store inside each drive. A Kinetic Drive can be considered an independent server and can be accessed via Ethernet, as shown in Figure 8.

In this project, we are looking at several research issues for Kinetic Drives within the architecture of Figure 9.

- We employ micro- and macro-benchmarks to understand the performance limits, trade-offs, and implications of replacing traditional hard drives with Kinetic Drives in data centers and high-performance systems. We measure latency, throughput, and other relevant metrics using different benchmarks, including the Yahoo! Cloud Serving Benchmark (YCSB).

- We compare these results with a SATA-based and a SAS-based traditional server running LevelDB. We find that the Kinetic Drives are CPU-bound but deliver an average sequential write throughput of 63 MB/sec and a sequential read throughput of 78 MB/sec for 1 MB value sizes. Our tests also demonstrate unique Kinetic features such as direct disk-to-disk (P2P) data transfer.

- In a large-scale key-value store system, there are many Kinetic Drives and outside users, as shown in the following figure. There are also one or more metadata servers that manage the Kinetic Drives. In this project, we design key indexing tables on the metadata server(s) and key-value allocation schemes on the Kinetic Drives in order to map key-value pairs to disk locations.

- We are also looking at search requests in a large-scale key-value store built on Kinetic Drives. Given attributes of the data supplied by users, the key-value store system should quickly locate the Kinetic Drives that store the data.
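As one possible illustration of a key indexing table on a metadata server, the sketch below maps keys to drives by key range using a sorted list of split points. Range partitioning is an assumption chosen for simplicity and is not necessarily the scheme designed in this project. The class name `KeyIndexTable` and the drive names are hypothetical.

```python
import bisect


class KeyIndexTable:
    """Range-partitioned key index: each drive owns one contiguous key range."""

    def __init__(self, boundaries, drives):
        # boundaries: sorted split points; drive i owns keys in
        # [boundaries[i - 1], boundaries[i]), with open-ended first/last ranges.
        if list(boundaries) != sorted(boundaries):
            raise ValueError("boundaries must be sorted")
        if len(drives) != len(boundaries) + 1:
            raise ValueError("need exactly one more drive than boundaries")
        self.boundaries = list(boundaries)
        self.drives = list(drives)

    def drive_for(self, key):
        """Return the drive responsible for the given key."""
        return self.drives[bisect.bisect_right(self.boundaries, key)]


if __name__ == "__main__":
    table = KeyIndexTable(["g", "p"], ["kinetic-0", "kinetic-1", "kinetic-2"])
    for key in ("apple", "melon", "zebra"):
        print(key, "->", table.drive_for(key))
```

A lookup costs one binary search on the metadata server, after which the client can contact the owning drive directly over Ethernet.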