Past Events

Equivariant machine learning

Tuesday, Dec. 6, 2022, 1:25 p.m. through Tuesday, Dec. 6, 2022, 2:25 p.m.

Walter 402 and virtually by Zoom (Zoom registration required)

Data Science Seminar

Soledad Villar (Johns Hopkins University)

Abstract

In this talk we will give an overview of the enormous progress in the last few years, by several research groups, in designing machine learning methods that respect the fundamental symmetries and coordinate freedoms of physical law. Some of these frameworks make use of irreducible representations, some make use of high-order tensor objects, and some apply symmetry-enforcing constraints. Different physical laws obey different combinations of fundamental symmetries, but a large fraction (possibly all) of classical physics is equivariant to translation, rotation, reflection (parity), boost (relativity), units scalings, and permutations. We show that it is simple to parameterize universally approximating polynomial functions that are equivariant under these symmetries, or under the Euclidean, Lorentz, and Poincaré groups, at any dimensionality d. The key observation is that nonlinear O(d)-equivariant (and related-group-equivariant) functions can be universally expressed in terms of a lightweight collection of (dimensionless) scalars -- scalar products and scalar contractions of the scalar, vector, and tensor inputs. We complement our theory with numerical examples that show that the scalar-based method is simple, efficient, and scalable, and mention ongoing work on cosmology simulations.
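As a rough illustration of the scalar-based idea in the abstract, the sketch below builds a vector-valued function of the form h(v_1, ..., v_n) = sum_i f_i(G) v_i, where G is the matrix of scalar products of the inputs, and checks numerically that it commutes with an orthogonal transformation. The coefficient functions f_i (a tanh of row sums), the helper names, and the test matrix are all hypothetical choices made for this example; they are not taken from the talk.

```python
import math


def dot(a, b):
    """Euclidean scalar product of two vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))


def gram(vectors):
    """Matrix of pairwise scalar products; invariant under O(d)."""
    return [[dot(u, v) for v in vectors] for u in vectors]


def equivariant_map(vectors):
    """h(v_1..v_n) = sum_i f_i(G) v_i, with f_i depending only on scalars.

    The choice f_i = tanh(sum of row i of G) is an arbitrary assumption
    for illustration; any function of the Gram matrix would work.
    """
    g = gram(vectors)
    coeffs = [math.tanh(sum(row)) for row in g]
    d = len(vectors[0])
    return [sum(c * v[k] for c, v in zip(coeffs, vectors)) for k in range(d)]


def apply(matrix, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [dot(row, v) for row in matrix]


def orthogonal_3x3(theta):
    """An orthogonal 3x3 matrix with determinant -1 (rotation plus reflection)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[-c, s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    vs = [[1.0, 2.0, 3.0], [0.5, -1.0, 2.0], [-2.0, 0.3, 1.0]]
    q = orthogonal_3x3(0.7)
    transformed_first = equivariant_map([apply(q, v) for v in vs])
    transformed_last = apply(q, equivariant_map(vs))
    # Equivariance: h(Q v_1, ..., Q v_n) should equal Q h(v_1, ..., v_n).
    print(max(abs(a - b) for a, b in zip(transformed_first, transformed_last)))
```

Because the coefficients depend on the inputs only through scalar products, which an orthogonal matrix leaves unchanged, the two results should agree up to floating-point rounding.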

How to optimize a power grid

Friday, Dec. 2, 2022, 1:25 p.m. through Friday, Dec. 2, 2022, 2:25 p.m.

Walter Library 402

Industrial Problems Seminar

Austin Tuttle (Open Systems International)

Abstract

There are more choices for a career than data scientist!

I will talk about what it is like to work as a software developer, my experience in industry, and how I got here.

Power systems are getting increasingly complex (distributed generation, more interconnections) and automated (more measurements and remote controls). Sifting through all this new data is a very complex problem, and with the large diversity of power systems around the world, there are many problems that can arise.

We will discuss some of the mathematics that shows up when managing a power grid, along with several problems that we solve. At a high level these relate to:

- How do you provide situational awareness to a grid operator?

- Can you efficiently detect violations and resolve them with minimal intervention?

- How do you utilize grid components to minimize power losses while maintaining grid stability?

- How do you detect an outage, restore customers, locate the cause, and dispatch crews?

Benefits of Weighted Training in Machine Learning and PDE-based Inverse Problems

Tuesday, Nov. 29, 2022, 1:25 p.m. through Tuesday, Nov. 29, 2022, 2:25 p.m.

Walter Library 402 or Zoom

Data Science Seminar

Yunan Yang (ETH Zürich)

You may attend the talk either in person in Walter 402 or register via Zoom. Registration is required to access the Zoom webinar.

Many models in machine learning and PDE-based inverse problems exhibit intrinsic spectral properties, which have been used to explain the generalization capacity and the ill-posedness of such problems. In this talk, we discuss weighted training for computational learning and inversion with noisy data. The highlight of the proposed framework is that we allow weighting in both the parameter space and the data space. The weighting scheme encodes both a priori knowledge of the object to be learned and a strategy to weight the contribution of training data in the loss function. We demonstrate that appropriate weighting from prior knowledge can improve the generalization capability of the learned model in both machine learning and PDE-based inverse problems.

Probabilistic Inference on Manifolds and Its Applications in 3D Vision

Tuesday, Nov. 22, 2022, 1:25 p.m. through Tuesday, Nov. 22, 2022, 2:25 p.m.

Data Science Seminar

Tolga Birdal (Imperial College London)

Registration is required to access the Zoom webinar.

Abstract

Stochastic differential equations have been at the heart of Bayesian inference since before they were popularized by recent diffusion models. Different discretizations corresponding to different MCMC implementations have been useful in sampling from non-convex posteriors. Through a series of papers, Tolga and friends have demonstrated that this family of methods is well suited to the geometric problems arising in 3D computer vision. Before inviting the rest of this community to geometric diffusion models, Tolga will share his perspectives on two topics: (i) the foundational tools of Riemannian MCMC methods for geometric inference and (ii) applications in probabilistic multi-view pose estimation as well as inference of combinatorial entities such as correspondences. If time permits, Tolga will continue with his explorations in optimal-transport-driven non-parametric methods for inference on Riemannian manifolds. Relevant papers include:

Bayesian Pose Graph Optimization [NeurIPS 2018]

Probabilistic Permutation Synchronization [CVPR 2019 Honorable Mention]

Synchronizing Probability Measures on Rotations [CVPR 2020]

Shaping Your Own Career as a Mathematical Biologist

Friday, Nov. 18, 2022, 1:25 p.m. through Friday, Nov. 18, 2022, 2:25 p.m.

Zoom

Industrial Problems Seminar

Nessy Tania

Senior Principal Scientist

Quantitative Systems Pharmacology, Early Clinical Division

Pfizer Worldwide Research, Development, and Medical

The event will be held virtually via Zoom. Registration is required to access the Zoom webinar.

Abstract

In this talk, I will share some of my personal journey as a math biologist and applied mathematician who pursued a tenure-track position in academia and is now working as a research scientist in the biopharma industry. I will discuss similarities and differences, rewards and challenges that I have encountered in both positions. On a more practical note, I will discuss how current trainees can prepare for a career in industry (specifically biopharma) and how to seek those opportunities. I will also describe the emerging field of Quantitative Systems Pharmacology (QSP): its deep roots in mathematical biology and how it is currently shaping the drug development process. Finally, I will share some of my own ongoing work as a QSP modeler who is supporting the Rare Disease Research Unit at Pfizer. As a key takeaway, I hope to share that there are multiple paths to success and a rewarding and stimulating career in applied mathematics.

A PDE-Based Analysis of the Symmetric Two-Armed Bernoulli Bandit

Tuesday, Nov. 15, 2022, 1:25 p.m. through Tuesday, Nov. 15, 2022, 2:25 p.m.

Walter Library 402 or Zoom

Data Science Seminar

Vladimir Kobzar (Columbia University)

You may attend the talk either in person in Walter 402 or register via Zoom. Registration is required to access the Zoom webinar.

The multi-armed bandit is a classic sequential prediction problem. At each round, the predictor (player) selects a probability distribution from a finite collection of distributions (arms) with the goal of minimizing the difference (regret) between the player’s rewards sampled from the selected arms and the rewards of the arm with the highest expected reward. The player’s choice of the arm and the reward sampled from that arm are revealed to the player, and this prediction process is repeated until the final round. Our work addresses a version of the two-armed bandit problem where the arms are distributed independently according to Bernoulli distributions and the sum of the means of the arms is one (the symmetric two-armed Bernoulli bandit). In a regime where the gap between these means goes to zero and the number of prediction periods approaches infinity, we obtain the leading order terms of the expected regret for this problem by associating it with a solution of a linear parabolic partial differential equation. Our results improve upon the previously known results; specifically we explicitly compute the leading order term of the optimal regret in three different scaling regimes for the gap. Additionally, we obtain new non-asymptotic bounds for any given time horizon. This is joint work with Robert Kohn available at https://arxiv.org/abs/2202.05767.
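To make the setup in the abstract concrete, here is a minimal simulation sketch of the symmetric two-armed Bernoulli bandit, in which the arm means are (1 + gap)/2 and (1 - gap)/2 and so sum to one. The player shown is a plain greedy rule chosen only for illustration; it is an assumption of this sketch, not the player or the PDE-based analysis from the talk, and the function name and parameters are hypothetical.

```python
import random


def simulate_symmetric_bandit(gap, horizon, seed=0):
    """Run a greedy player on a symmetric two-armed Bernoulli bandit.

    Returns (pseudo_regret, pulls), where pseudo_regret is the gap times
    the number of rounds in which the worse arm (index 1) was selected.
    """
    rng = random.Random(seed)
    means = [(1 + gap) / 2, (1 - gap) / 2]  # the two means sum to one
    pulls = [0, 0]
    wins = [0, 0]
    for _ in range(horizon):
        if pulls[0] == 0:
            arm = 0  # try each arm once before comparing
        elif pulls[1] == 0:
            arm = 1
        else:
            rate0 = wins[0] / pulls[0]
            rate1 = wins[1] / pulls[1]
            if rate0 == rate1:
                arm = rng.randrange(2)  # break ties at random
            else:
                arm = 0 if rate0 > rate1 else 1
        # Only the selected arm's reward is sampled and revealed.
        reward = 1 if rng.random() < means[arm] else 0
        pulls[arm] += 1
        wins[arm] += reward
    return gap * pulls[1], pulls


if __name__ == "__main__":
    regret, pulls = simulate_symmetric_bandit(gap=0.1, horizon=1000, seed=0)
    print(regret, pulls)
```

Averaging the returned pseudo-regret over many seeds gives a Monte Carlo estimate of the expected regret of this particular greedy rule for a given gap and horizon.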

Using cloud computing? You might benefit from data science!

Friday, Nov. 11, 2022, 1:25 p.m. through Friday, Nov. 11, 2022, 2:25 p.m.

Walter Library 402 or Zoom

Industrial Problems Seminar

Marc Light (Censys.io)

You may attend the talk either in person in Walter 402 or register via Zoom. Registration is required to access the Zoom webinar.

Organizations are doing more and more of their computing using cloud resources. Ideally, this computing would be performant, reliable, and efficient (PRE, as they say at Meta Infra). Drawing on 2.5 years of managing a team of 10 infrastructure data scientists at Meta, I describe classes of use cases and approaches to making data-driven decisions and generally improving PRE using ML/OR techniques. Clearly, large companies like Meta, Google, Amazon, Microsoft, etc. could receive a huge return-on-investment from such work. But increasingly, even medium-sized organizations in cybersecurity, energy analytics, healthcare, etc., could benefit from DS/ML/OR for the effective use of cloud computing.

Three Uses of Semidefinite Programming in Approximation Theory

Tuesday, Nov. 8, 2022, 1:25 p.m. through Tuesday, Nov. 8, 2022, 2:25 p.m.

Walter Library 402 or Zoom

Data Science Seminar

Simon Foucart (Texas A&M University)

You may attend the talk either in person in Walter 402 or register via Zoom. Registration is required to access the Zoom webinar.

In this talk, modern optimization techniques are publicized as fitting computational tools to attack several extremal problems from Approximation Theory which had reached their limitations based on purely analytical approaches. Three such problems are showcased: the first problem---minimal projections---involves minimization over measures and exploits the moment method; the second problem---constrained approximation---involves minimization over polynomials and exploits the sum-of-squares method; and the third problem---optimal recovery from inaccurate observations---is highly relevant in Data Science and exploits the S-procedure. In each of these problems, one ends up having to solve semidefinite programs.

Seek Truth, Create Value — An engineer’s perspective on 3M “Science. Applied to Life.”

Friday, Nov. 4, 2022, 1:25 p.m. through Friday, Nov. 4, 2022, 2:25 p.m.

Walter Library 402 or Zoom

Industrial Problems Seminar

Fay Salmon (3M)

You may attend the talk either in person in Walter 402 or register via Zoom. Registration is required to access the Zoom webinar.

How do engineers apply their technical training to discover truth, advance understanding, and transform knowledge into products to improve our everyday life? Fay Salmon, a Staff Scientist with 3M Corporate Research Systems Laboratory, will discuss some of the work she and her team have done to uncover physical mechanisms to develop and improve various 3M products from Cubitron II abrasives to Optically Clear Adhesives to VHB Structural Glazing Tapes. Driven by the belief that behind every phenomenon there are underlying governing principles that can be discovered, she will discuss how curiosity and questioning, followed by a systematic and collaborative approach can lead to depth of understanding. This understanding provides a foundation on which to innovate and create value through development of robust and high-performance products.

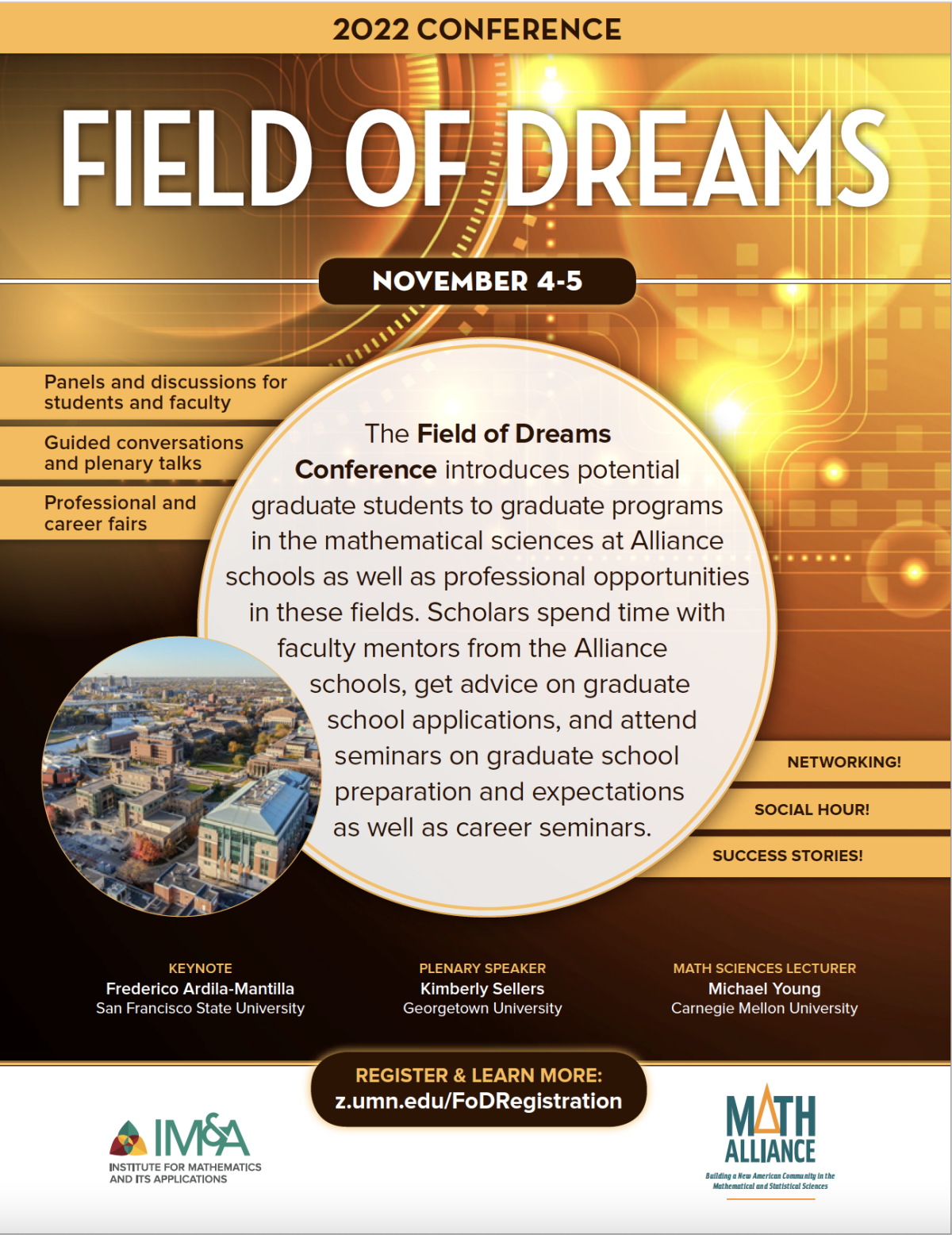

2022 Field of Dreams Conference

Friday, Nov. 4, 2022, 8 a.m. through Saturday, Nov. 5, 2022, 5 p.m.

University of Minnesota

Organizers

The Field of Dreams Conference introduces potential graduate students to graduate programs in the mathematical sciences at Alliance schools as well as professional opportunities in these fields. Scholars spend time with faculty mentors from the Alliance schools, get advice on graduate school applications, and attend seminars on graduate school preparation and expectations as well as career seminars.

Tentative list of speakers

- Federico Ardila-Mantilla (San Francisco State University) (confirmed)

- Kimberly Sellers (University of Pennsylvania) (confirmed)

- Michael Young (Carnegie Mellon University) (confirmed)