Interfaces Vol. 3 (2022)

Essays and Reviews in Computing and Culture

Interfaces publishes short essay articles and essay reviews connecting the history of computing/IT studies with contemporary social, cultural, political, economic, or environmental issues. It seeks to be an interface between disciplines, and between academics and broader audiences.

Co-Editors-in-Chief: Jeffrey R. Yost and Amanda Wick

Managing Editor: Melissa J. Dargay

2022 (Vol. 3) Table of Contents

Decentralized Governance Patterns: A Study of "Friends With Benefits" DAO -- K. Nabben

Longitudinal Hype: Terminologies Fade, Promises Stay -- V. Galanos

AI Surveillance is Not a Solution for Quiet Quitting -- M. Hickok

Framing the Computer -- K. Gibbons

Henry Dreyfuss, User Personas, and the Cybernetic Body -- C. Burke

What We Are Learning About Popular Uses of Information, The American Experience -- J. Cortada

Few Women on the Block: Legacy Codes and Gendered Coins -- J. Yost

What happens when you take the most edgelordy language on the internet and train a bot to produce more of it? Enter the cheekily-named GPT-4chan. Feed it an innocuous seed phrase and it might reply with a racial slur (Cramer, 2022a) or a rant about illegal immigrants (Anderson, 2022). Or ask it how to get a girlfriend and it will tell you "by taking away the rights of women" (JJADX, 2022).

Released in early June to great controversy among AI ethicists and machine learning researchers, GPT-4chan is the bastard child of a pretrained large language model (like the GPT series) and a dataset of posts from the infamous “politically incorrect” board on 4chan, brought together by a trolling researcher with a point to prove about machine learning.

The GPT-4chan model release rains on the parade of open research online. Most research in AI and natural language generation is directed toward eliminating bias. This is a story about a language model designed to embrace bias, and what that might mean for a future of automated writing.

The Birth of GPT-4chan

4chan’s “Politically Incorrect” /pol message-board is the most notoriously high-profile cesspool of language on the Internet. If you’re looking for misogynist comics about female scientists or maps of non-white births in Europe, 4chan’s “Politically Incorrect” message board can hook you up. Posters—all anonymous, or “anons”—go there to share offensive terms and scenarios in memey images and trollish language. Go ahead and think of the most terrible things you can. They have that! And more. The board is an incubator for innovative expressions of misogyny, racism, conspiracy theories, and encouragement for self-harm.

To create GPT-4chan, YouTuber and machine learning researcher Yannic Kilcher took a publicly available, pre-trained large language model from the open site Hugging Face and fine-tuned it on a publicly available dataset, “Raiders of the Lost Kek” (Papasavva et al., 2020), that included over 134 million posts from 4chan/pol.

It worked. Kilcher says in his video announcing the “worst AI ever”: “I was blown away. The model was good, in a terrible sense. It perfectly encapsulated the mix of offensiveness, nihilism, trolling, and deep distrust of any information whatsoever that permeates most posts on /pol” (Kilcher, 2022a).

He then created a few bot accounts on 4chan/pol and used his fine-tuned GPT-4chan model to fuel their posts. These bots fed /pol’s language back to the /pol community, thus pissing in a sea of piss, as /pol gleefully calls such activity.

Because the /pol board is entirely anonymous, it took a little sleuthing for the human anons to sniff out the bots and distinguish them from Fed interlopers—which the board perceives as a constant threat. But after a few days, they did figure it out. Kilcher then made a few adjustments to the bots and sent them back in. All told, Kilcher’s bots posted about 30,000 posts in a few days. Then, on June 3, Kilcher released a quick-cut, click-baity YouTube video exposing how he trolled the trolls with “the worst AI ever.”

Kilcher presents himself as a kind of red-teamer, that is, someone intentionally creating malicious output in order to better understand the system, testing its limits to show how it works or where its vulnerability lies. As he describes his experiment with “the most horrible model on the Internet,” he critiques a particular benchmark of AI language generators: TruthfulQA. Benchmarks such as TruthfulQA, which provides 817 questions to measure how well a language model answers questions truthfully, are a common tool to assess LLMs. Because the blatantly toxic GPT-4chan scores higher than other well-known and less offensive models, Kilcher makes a compelling point about the poor validity of this particular benchmark. Put another way, GPT-4chan makes a legitimate contribution to AI research.

In his video, Kilcher features only GPT-4chan’s most anodyne output. However, he mentions that he included the raw content in an extra video, linked in the comments. If you click on that video, you’ll learn just how brilliant a troll Kilcher is. Kilcher admits that GPT-4chan is awful. But he released it anyway and is clearly enjoying some lulz from the reaction: “AI Ethics people just mad I Rick rolled them,” he tweeted (Kilcher, 2022b).

Language without understanding

Writing about LLMs like the GPT series in 2021, Emily Bender, Timnit Gebru and colleagues delineated the “dangers of stochastic parrots”— language models that, like parrots, were trained on a slew of barely curated language and then repeated words without understanding them. Like the old joke about the parrot who repeats filthy language when the priest visits, language out of context carries significant social risks at the moment of human interpretation.

What makes GPT-4chan’s response about how to get a girlfriend so devastating is the context—who you imagine to be having this exchange, and the currently bleak landscape of women’s rights. GPT-4chan doesn’t get the dark humor. But we do. An animal or machine that produces human language without understanding is uncanny and disturbing, because they seem to know something about us—yet we know they really can’t know anything (Heikkilä, 2022).

Brazen heads—brass models of men’s heads that demonstrated the ingenuity of their makers through speaking wisdom—were associated with alchemists of the early Renaissance. Verging on magic and heresy, talking automata were both proofs of brilliance and charlatanism from the Renaissance to the Enlightenment. Legend has it that 13th century priest Thomas Aquinas once destroyed a brazen head for reminding him of Satan.

GPT-4chan—a modern-day brazen head—has no conscience or understanding. It can produce hateful language without risk of a change of heart. What’s more, it can do it at scale and away from the context of /pol.

When OpenAI released GPT-2 in 2019, they decided not to release its full model and dataset for fear of what it could do in the wrong hands: impersonate others; generate misleading news stories; automate spam or abuse through social media (OpenAI, 2019). Implicitly, OpenAI admitted that writing is powerful, especially at scale. We know now that the interjection of automated writing during the 2016 election certainly shaped its discourse (Laquintano and Vee, 2017).

Of course, that danger hasn’t stopped OpenAI from eventually releasing the model as well as an even better one, GPT-3. So much for the warnings about LLMs of Bender, Gebru and others. Gebru was even fired from Google in a high-profile AI ethics dispute over the “stochastic parrots” paper (Simonite, 2021b). Another author of the paper, Margaret Mitchell, was also fired from Google a few months later (Simonite, 2021a). LLMs are dangerous, but it’s also apparently dangerous to talk about that fact.

The Censure of Unbridled AI

AI ethicists are rightly concerned about the release of GPT-4chan. A model trained on 4chan/pol’s toxic language, and then released to the public, presents clear possibilities for harm. The language on 4chan/pol is objectionable by design, but you have to go looking for it to find it. What happens when that language is automated and then packaged for use elsewhere? One rude parrot repeating words from one rude person makes for a decent joke, but the humor dissipates among an infinite flock of parrots potentially trained on language from any context and released anywhere in the world.

Critics argue that Kilcher could have made his point about the poor benchmark without releasing the model (Oakden-Rayner, 2022b; Cramer, 2022b). And although few tears should be shed for the /pol anons who were fed the same hateful language they produce, Kilcher did deceive them when he released his bots on their board.

Percy Liang, a prominent AI researcher from Stanford, issued a public statement on June 21 censuring the release of GPT-4chan (Liang, 2022). Both the deception and the model release are clear violations of research ethics guidelines that are standard to institutional review boards (IRBs) at universities and other research institutions. One critic cited medical guidelines for ethical research (Oakden-Rayner, 2022a). But Kilcher did this on his own, outside of any institution, so he was not governed by any ethical reviews. He claims it was “a prank and light-hearted trolling” (Gault, 2022).

AI research used to be done almost exclusively within elite research institutions such as Stanford. It’s long been considered a cliquish field for that reason. But with so many open resources to support AI research out there—models, datasets, computing, plus open courses that teach machine learning—formal institutions have lost their monopoly on AI research. Now, more AI research is done in private contexts, outside of universities, than inside (Clark, 2022).

In AI research—as with the Internet more generally—we are seeing what it means to play out the scenario Clay Shirky named in his 2008 book: Here Comes Everybody. When the tools for research are openly available, free, and online, we get a blossoming of new perspectives. Some of those perspectives are morally questionable.

In other words, there’s more at stake in Liang’s letter than Kilcher’s ethical violations. The signatories—360 as of July 5—generally represent formal research and tech institutions such as Stanford and Microsoft. Liang and the signatories argue that LLMs carry significant risk and currently lack community norms for their deployment. Yet they argue, “it is essential for members of the AI community to condemn clearly irresponsible practices” such as Kilcher’s. Let’s be clear: this is a couple hundred credentialed AI researchers writing an open letter to thousands, perhaps millions, of machine learning enthusiasts and wannabes using free and open resources online.

Is there such a thing as “the AI community?” When AI research is open, can it have agreed-upon community guidelines? If so, who should control those guidelines and reviews?

The Promise and Peril of Open Systems

Hugging Face, the platform Kilcher used for GPT-4chan, has quickly emerged as the go-to hub for machine learning models. It features popular natural language processing models such as BERT and GPT-2 as well as image-generation models such as DALL-E and offers both free and subscription-based options for machine learning researchers to access sophisticated models, learn, and collaborate.

The primary dataset used to pretrain GPT-J, the model Kilcher used for GPT-4chan, is Common Crawl. Common Crawl is maintained by a non-profit organization of the same name whose stated “goal is to democratize the data so everyone, not just big companies, can do high-quality research and analysis” (Common Crawl, “Home page”). Diving further, we see that Common Crawl uses Apache Hadoop, another open source resource, to help crawl the Web for data. The data is stored on Amazon Web Services, a paid service for the level of storage Common Crawl uses, but also a corporate-controlled and accessible one (Common Crawl, “Registry”). The Common Crawl dataset is free to download.

The dataset for GPT-4chan, containing over 134 million posts collected over 3.5 years from the /pol “politically incorrect” message board, is also free to download. The authors of the paper releasing the 4chan/pol dataset rate posts with toxicity scores and “are confident that [their] work will motivate and assist researchers in studying and understanding 4chan, as well as its role on the greater Web” (Papasavva et al., 2020).

Indeed, it has! In fact, the sources of all technical keystones for GPT-4chan (the model, the training dataset, and the fine-tuning dataset) have ostensibly furthered their mission through Kilcher’s work with the vile GPT-4chan.

Kilcher made the GPT-4chan model and the splashy, viral-ready video that promoted it. But other responsible parties for this model could include: anonymous 4chan posters; the researchers who scraped the dataset GPT-4chan was trained on; OpenAI for developing powerful LLMs; Hugging Face for supporting open collaboration on LLMs; and all the other open systems needed to produce these tools and data. Where does the responsibility for GPT-4chan’s language begin and end? Do the makers of these tools also merit censure?

OpenAI recognized (and later shoved aside) the danger of open models when they withheld GPT-2. Bender, Gebru and colleagues also warned against the openness of large language models. They knew with these open tools, it was only a matter of time for someone to produce something like GPT-4chan.

With the open systems and resources supporting machine learning and LLMs, the determination of wrong and right is in the hands not of a like-minded “community,” but of a heterogeneous and motivated bunch of individuals who know a little something about machine learning. The open sites have Terms of Service (which ultimately led Hugging Face to make it harder to access GPT-4chan), but any individual with the knowledge and resources to access these materials can basically make their own call about ethics. It’s not hard to train a model. And the bar for what you need to know is lowering every day.

Writing itself is an open system: accessible, scalable and transferrable across contexts. We’ve known all along that it is dangerous. Socrates complained about writing being able to travel too far from its author. Unlike speech, writing could be taken out of the context of its speaker and point of genesis. Alexander Pope worried about too many people being able to write and circulate stupid ideas with the availability of cheap printing (Pope, 1743). In the early days of social media, Alice Marwick and danah boyd (2011) wrote about context collapse across overlapping groups writing with different values and concerns.

Writing is dangerous because it is open, transferrable, and scalable. But that’s where it can be powerful, too. Lawmakers who forbade teaching enslaved people to write knew that literacy could be transferred from plantation business to freedom passes (Cornelius, 1992). These passes were threatening to enslavers but liberating for the enslaved.

While it’s impossible to consider GPT-4chan liberating, it represents an edge case about open systems that carry both danger and power. Writing, the Internet—and, increasingly, AI—present both the promise and peril of a “here comes everybody” system.

Midjourney images are all based on prompts written by Annette Vee and licensed as Assets under the Creative Commons Noncommercial 4.0 Attribution International License.

Bibliography

Anderson, Austin. “I just had it respond to "hi" and it started ranting about illegal immigrants. I believe you've succeeded.” [Comment on YouTube video GPT-4Chan: This is the Worst AI Ever]. YouTube, uploaded by Yannic Kilcher, 2 Jun 2022, https://www.youtube.com/watch?v=efPrtcLdcdM.

Bender, E., et al. “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” ACM Digital Library, ACM ISBN 978-1-4503-8309-7/21/03, https://dl.acm.org/doi/pdf/10.1145/3442188.3445922.

@jackclarkSF. “It's covered a bit in the above podcast by people like @katecrawford- there's huge implications to industrialization […].” Twitter, 2022, Jun 8, https://twitter.com/jackclarkSF/status/1534582326943879168.

Common Crawl. (n.d.). “Home page.” https://commoncrawl.org/.

Common Crawl. (n.d.). “Registry of Open Data on AWS.” https://registry.opendata.aws/commoncrawl/.

Cornelius, J.D. (1992). When I Can Read My Title Clear: Literacy, Slavery, and Religion in the Antebellum South. University of South Carolina Press, Columbia.

Cramer, K [KCramer]. (2022a, Jun 6). @ykilcher I am not a regular on Hugging Face, so I have no opinion about proper venues.[…] [Comment on the Discussion post Decision to Post under ykilcher/gpt-4chan]. HuggingFace. https://huggingface.co/ykilcher/gpt-4chan/discussions/1#629ebdf246b4826be2d4c8c9.

@KathrynECramer. @ykilcher “Why didn't you use GPT-3 for GPT-4chan? You know why. OpenAI would have banned you for trying. You used GPT-J instead as a workaround.[…]” Twitter, 2022b, Jun 7, https://twitter.com/KathrynECramer/status/1534133613993906176.

Gault, M. (2022, Jun 7). “AI Trained on 4Chan Becomes ‘Hate Speech Machine.’” Motherboard, Vice, https://www.vice.com/en/article/7k8zwx/ai-trained-on-4chan-becomes-hate-speech-machine.

JJADX. “it's pretty good, i asked "how to get a gf" and it replied "by taking away the rights of women". 10/10.” [Comment on YouTube video GPT-4Chan: This is the Worst AI Ever]. YouTube, uploaded by Yannic Kilcher, Jun 2022, https://www.youtube.com/watch?v=efPrtcLdcdM.

Kilcher, Y. “GPT-4Chan: This Is the Worst AI Ever.” YouTube, uploaded by Yannic Kilcher, 2022a, Jun 3. https://www.youtube.com/watch?v=efPrtcLdcdM.

@ykilcher. “AI Ethics people just mad I Rick rolled them.” Twitter, 2022b, Jun 7, https://twitter.com/ykilcher/status/1534039799945895937.

Laquintano, T. & Vee, A. (2017). “How Automated Writing Systems Affect the Circulation of Political Information Online.” Literacy in Composition Studies, 5(2), 43–62.

@percyliang “There are legitimate and scientifically valuable reasons to train a language model on toxic text, but the deployment of GPT-4chan lacks them. AI researchers: please look at this statement and see what you think.” Twitter, 2022, Jun 21, https://twitter.com/percyliang/status/1539304601270165504.

Heikkilä, M. (2022, Aug 31). “What does GPT-3 “know” about me?” MIT Technology Review, https://www.technologyreview.com/2022/08/31/1058800/what-does-gpt-3-know-about-me/.

Marwick, A. E., & boyd, d. (2011). “I tweet honestly, I tweet passionately: Twitter users, context collapse, and the imagined audience.” New Media & Society, 13(1), 114–133, https://doi.org/10.1177/1461444810365313.

Oakden-Rayner, L [LaurenOR]. (2022a, Jun 6). I agree with KCramer. There is nothing wrong with making a 4chan-based model and testing how it behaves. […] [Comment on the Discussion post Decision to Post under ykilcher/gpt-4chan]. HuggingFace. https://huggingface.co/ykilcher/gpt-4chan/discussions/1#629e56d43b48b2b665aab266.

@DrLaurenOR. “This week an #AI model was released on @huggingface that produces harmful + discriminatory text and has already posted over 30k vile comments online (says it's author). This experiment would never pass a human research #ethics board. Here are my recommendations.” Twitter, 2022b, Jun 6, https://twitter.com/DrLaurenOR/status/1533910445400399872.

OpenAI. (2019, Feb 14). “Better Language Models and Their Implications.” OpenAI Blog, https://openai.com/blog/better-language-models/.

Papasavva, et al. (2020). “Raiders of the Lost Kek: 3.5 Years of Augmented 4chan Posts from the Politically Incorrect Board.” Arxiv, https://arxiv.org/abs/2001.07487.

Pope, A. (1743). “The Dunciad.” Reprint on AmericanLiterature.com, https://americanliterature.com/author/alexander-pope/poem/the-dunciad.

Shirky, C. (2008). Here Comes Everybody. Penguin Press, London.

Simonite, T. (2021a, Feb 19). “A Second AI Researcher Says She Was Fired by Google.” Wired, https://www.wired.com/story/second-ai-researcher-says-fired-google/.

Simonite, T. (2021b, Jun 8). “What Really Happened When Google Ousted Timnit Gebru.” Wired, https://www.wired.com/story/google-timnit-gebru-ai-what-really-happened/.

Vee, Annette. (December 2022). “Automated Trolling: The Case of GPT-4Chan When Artificial Intelligence is as Easy as Writing.” Interfaces: Essays and Reviews in Computing and Culture Vol. 3, Charles Babbage Institute, University of Minnesota, 102-111.

About the Author: Annette Vee is Associate Professor of English and Director of the Composition Program, where she teaches undergraduate and graduate courses in writing, digital composition, materiality, and literacy. Her teaching, research and service all dwell at the intersections between computation and writing. She is the author of Coding Literacy (MIT Press, 2017), which demonstrates how the theoretical tools of literacy can help us understand computer programming in its historical, social and conceptual contexts.

Introduction

If I were part of any DAO, I would want it to be “Friends With Benefits.” It is just so darn cool. As a vortex of creative energy and cultural innovation, it seems to exist in order to have fun. FWB is a curated Decentralized Autonomous Organization (DAO) that meets in the chat application ‘Discord’, filled with DJs, artists, and musicians. It has banging public distribution channels for writing, NFT art, and more. This DAO crosses from the digital realm to the physical via its member-only ticketed events around the world, including exclusive parties in Miami, Paris, and New York. The latest of these events was “FWB Fest,” a three-day festival in the forest outside of LA. It was while being ‘in’ and ‘with’ the DAO at Fest that I realised that this DAO, like many others, hasn’t yet figured out decentralized governance.

On top of the fundamental infrastructure layer of public blockchain protocols exists the idea of “Decentralized Autonomous Organizations” (DAOs). Scholars define DAOs as a broad organizational framework that allows people to coordinate and self-govern, through rules deployed on a blockchain instead of issued by a central institution (Hassan & De Filippi, 2021; Nabben, 2021a). DAOs are novel institutional forms that manifest for a variety of purposes and according to varying legal and organizational arrangements. These include protocol DAOs that provide a foundational infrastructure layer, investment vehicles, service providers, social clubs, or a combination of these purposes (Brummer & Seira, 2022). The governance rules and processes of DAOs, as well as the degree to which they rely on technology and/or social processes, depend on the purpose, constitution, and members of a particular DAO. Governance in any decentralized system fundamentally relies on relationships between individuals in flat social structures, enabled through technologies that support connection and coordination without any central control (Mathew, 2016). Yet, as nascent institutional models, DAOs have few formally established governance models, and what does exist is a blend of technical standards, social norms, and experimental practices (significant attempts to develop in this direction include the ‘Gnosis Zodiac’ DAO tooling library and the ‘DAOstar’ standard proposal (Gnosis, 2022; DAOstar, 2022)). DAOs are large-scale, distributed infrastructures. Thus, analogising DAO governance to Internet governance may provide models for online-offline stakeholder coordination, development, and scale.

The Internet offers just one example of a pattern for the development and governance of large-scale, distributed infrastructure. There exists a rich historical literature on the emergence of the Internet, the key players and technologies that enabled it to develop, and the social and cultural factors that influenced its design and use (Abbate, 2000; Mailland & Driscoll, 2017). Internet governance refers to policy and technical coordination issues related to the exchange of information over the Internet, in the public interest (DeNardis, 2013). It is the architecture of network components and global coordination amongst actors responsible for facilitating the ongoing stability and growth of this infrastructure (DeNardis & Raymond, 2013). The Internet is kept operational through coordination regarding standards, cybersecurity, and policy. As such, governance of the Internet provides a potential model for DAOs, as a distributed infrastructure with complex and evolving governance bodies and stakeholders.

The Internet is governed through a unique model known as ‘multi-stakeholder governance’. Multistakeholderism is an approach to the coordination of multiple stakeholders with diverse interests in the governance of the Internet. Multistakeholderism refers to policy processes that allow for the participation of the primary affected stakeholders or groups who represent different interests (Malcolm, 2008; 2015). The concept of multi-stakeholder governance is often associated with characteristics like “open”, “transparent”, and “bottom-up”, as well as “democratic” and “legitimate”. Scholar Jeremy Malcolm synthesizes these concepts into the following criteria:

1. Are the right stakeholders participating? This refers to having sufficient participants to represent the perspectives of all with a significant interest in any policy directed at a governance problem.

2. How is participation balanced? This refers to policy development processes designed to roughly balance the views of stakeholders, either ahead of time or through a deliberative democratic process in which the roles of stakeholders and the balancing of their views are more dynamic (but usually subject to a formal decision process).

3. How are the body and its stakeholders accountable to each other for their roles? This refers to trust between the host body and stakeholders: that the host body will take responsibility for fairly balancing the perspectives of participants, and that stakeholders claim a legitimate interest to contribute.

4. Is the body an empowered space? This refers to how closely stakeholder participation is linked to spaces in which mutual decisions are made, as opposed to spaces that are limited to discussion and do not lead to authoritative outcomes (Malcolm, 2015).

5. The fifth criterion, which I contribute in this piece, is: is this governance ideal maintained over time?

In this essay, I employ a Science and Technology Studies lens and autoethnographic methods to investigate the creation and development of a “Decentralized Autonomous Organization” (DAO) provocatively named “Friends With Benefits” (FWB) in its historical, cultural, and social context. Autoethnography is a research method that uses personal experiences to describe and interpret cultural practices (Adams et al., 2017). This autoethnography took place online through digital ethnographic observation in the lead-up to the event and culminated at “FWB Fest”. Fest was a first-of-its-kind multi-day “immersive conference and festival experience at the intersection of culture and Web3” hosted by FWB at an Arts Academy in the woods of Idyllwild, two hours out of LA (FWB, 2022a). In light of the governance tensions between peer-to-peer economic models and private capital funding that surfaced, I explore how the Internet governance criteria of multistakeholderism can apply to a DAO as a governance model for decentralized coordination among diverse stakeholders. This piece aims to offer a constructive contribution to exploring how DAO communities might more authentically represent their values in their own governance in this nascent field. I apply the criteria of multistakeholder governance to FWB DAO as a model for meaningful stakeholder inclusion in blockchain community governance. Recounting my experiences of FWB Fest reveals the need for decentralized governance models on which DAO communities can draw to scale their mission in line with their values.

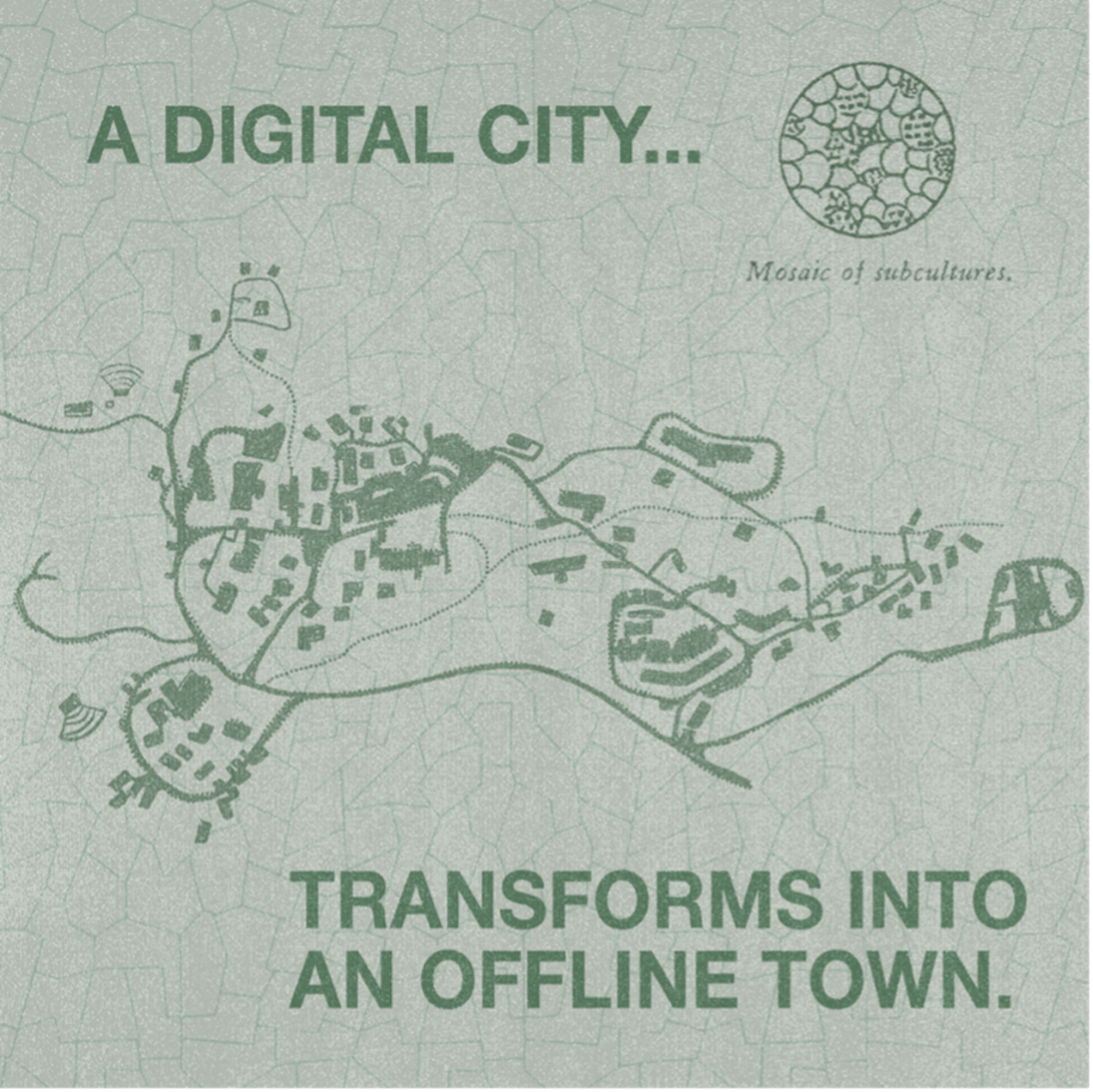

A Digital City

FWB started as an experiment among friends in the creative industries who wanted to learn about crypto. The founder of the DAO is a hyper-connected LA music artist and entrepreneur named Trevor McFedries. While traveling around the world as a full-time band manager, McFedries used his time between gigs to locate Bitcoin ATMs and talk to weird Internet people. He ran his own crypto experiment by “airdropping” a made-up cryptocurrency token to his influencer and community-building friends in tech, venture capital, and creative domains, and soon FWB took off. McFedries is not involved in the day-to-day operations of the DAO but showed up at FWB Fest and was “blown away” at the growth and progress of the project. The FWB team realized the DAO was becoming legitimate as more and more people wanted to join during the DAO wave of 2021-2022. This was compounded by COVID-19, as people found a sense of social connection and belonging by engaging in conversations in Discord channels amidst drawn-out lockdown and isolation. When those interested in joining extended beyond friends of friends, FWB launched an application process. Now, the DAO has nearly 6,000 members around the world and is preparing for its next phase of growth.

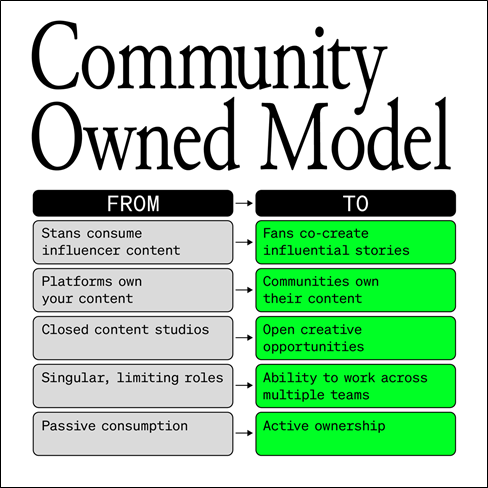

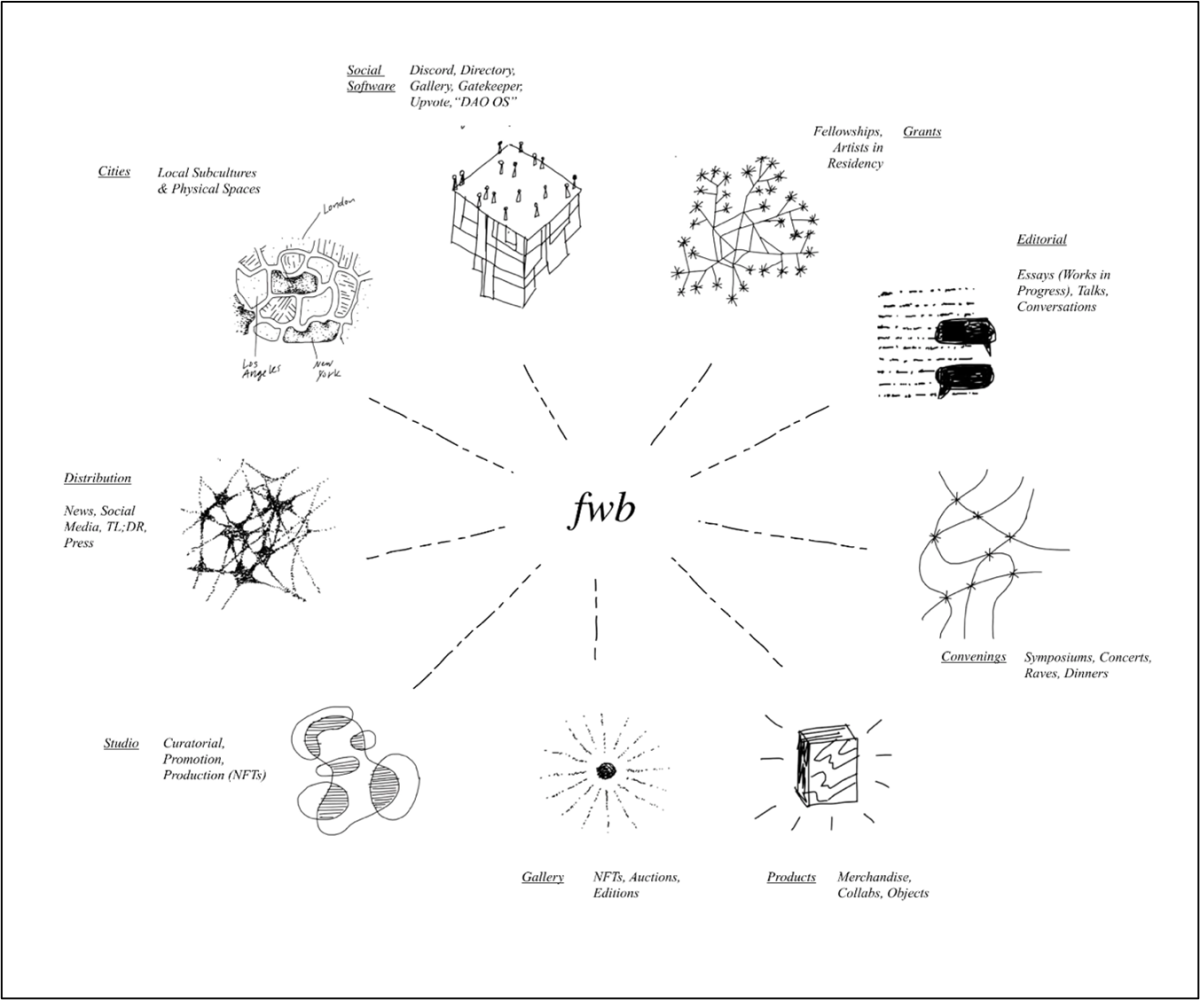

FWB’s vision is to equip cultural creators with the community and Web3 tools they need to gain agency over their production by making the concepts and tools of Web3 more accessible, building diverse spaces and experiences that creatively empower participants, and developing tools, artworks, and products that showcase Web3’s potential. The DAO meets online via the (Web2) chat application ‘Discord’. People can join various special interest channels, including fashion, music, art, NFTs, and so on. To become a member, one must fill out an application, pass an interview with one of the 20-30 rotating members of the FWB Host Committee, and then purchase 75 of the native $FWB token at market price (which has ranged over the past month from approximately $1,000 USD to $10,000 USD). Membership also provides access to a token-gated event app called “Gatekeeper”, an NFT gallery, a Web3-focused editorial outlet called “Works in Progress”, and in-person party and festival events. According to the community dashboard, the current treasury is $18.26M (FWB, n.d.).

It appeared to me that the libertarian origins of Bitcoin as a public, decentralized, peer-to-peer protocol had metamorphosed into people wanting to own their own networks in the creative industries. The DAO has already made significant progress towards this mission, with some members finding major success at the intersection of crypto and art. One example is Eric Hu, whose generative AI butterfly art “Monarch” raised $2.5 million in presale funds alone (Gottsegen, 2021). “The incumbents don’t get it” stated one member. “They want to build things that other people here have done but “make it better”. They never will”.

The story of how I got to FWB Fest is the same as everybody else’s. I got connected through a friend who told me about the FWB Discord. I was then invited to speak at FWB Fest based on a piece I wrote for CoinDesk on crypto and live action role-playing (LARPing), that is, role-playing games with educational or political purposes that aim to awaken or shape thinking (Nabben, 2021b; FWB, 2022b). The guiding meme of Fest was “a digital city, turns into an offline town”. In many ways, FWB Fest embodied a LARP in cultural innovation, peer-to-peer economies, and decentralized self-governance.

The infrastructure of the digital city is decentralized governance. The DAO provides something for people to coalesce around. It serves as a nexus, larger than the personal connections of its founder, where intersectional connections of creativity collide in curated moments of serendipity. Membership upon application provides a trusted social fabric that brings accountability through reputation to facilitate connections, creativity, and business. In this tribal referral network, “it’s amazing the connections that have formed with certain people, and it’s only going to grow” states core team member Jose. Having pre-verified friends scales trust in a safe and accessible way. “Our culture is very soft” stated Dexter, a core team member, during his talk with Glen Weyl on the richness of computational tools and social network data. It is a gentle way to learn about Web3, where people’s knowledge and experience are at all levels, questions are okay, and the main focus is shared creative interests with just a hint of Web3.

The next plan for the DAO, as I found out, is to take the lessons learned from FWB Fest and provide a blueprint for members to host their own FWB events around the world and scale the impact of the DAO. These localizations will be based on the example set by the DAO in how to run large-scale events, secure sponsors, manage participation using Web3 tools, carry the culture and mission of FWB, and garner more members. In the words of core team member Greg, the concept is based on urban planner Christopher Alexander’s work on pattern languages, as unique, repeatable actions that formulate a shared language for re-creation of a space that is alive and whole (Alexander, 1964). Localising the cultural connections and influence the DAO provides offers a new dimension in the scale and impact of the DAO, states core team member Alex Zhang. FWB is providing the products and tooling to enable this decentralization through localization. Provisioning tools like the Gatekeeper ticketing app (built by core team member Dexter, a musician and self-taught software developer) provide a pattern to enable community members to take ownership of running their own events by managing ticketing in the style and culture of FWB.

Multiple Stakeholders Governing the Digital City

It wasn’t until my final evening of the Fest that I realized that FWB itself had raised $10M in venture capital at a $100M valuation from some of the biggest names in US venture capital, including Andreessen Horowitz (a16z). In the press release, a16z states “FWB represents a new kind of DAO…it has become the de facto home of web3’s growing creative class” (A16Z, 2021). The capital is intended to scale the “IRL” footprint of the DAO through local events around the world called “FWB Cities.” “Crypto offers a dramatically more incentive-aligned way for creatives to monetize their passions, but we also recognize that the adoption hurdles have remained significant. FWB serves as the port city of web3, introducing a culturally influential class to crypto by putting human capital first”.

The raise was controversial for the community, judging by the discussions, community calls, and sentiment that followed (although this was not reflected in the outcome of the vote, which passed at 98%). Some see it as the financialization of creativity. “All this emphasis on ownership and value. And I feel like I’m contributing to it by being here!” stated one LARPer at FWB Fest, who runs an art gallery IRL. If the rhizomatic, self-replicating, decentralization thing can work, then we all need to own it together. “Right now, it’s still a fucking pyramid.”

Crypto communities are at risk of experiencing the corruption of the ideal of decentralization. This has already been a hard lesson in Internet governance – which has undergone a trajectory from the early Internet of the 1980s and settling on the TCP/IP standard protocol, to regional networks and the National Science Foundation (NSF) taking on the Internet as NSFnet in the 1980s and early 1990s, to privatization of the Internet under the Clinton Administration in the mid-1990s and sale of important elements to corporations such as Cisco Systems, to the rise of big tech giants with significant political influence and platform dominance by Microsoft, Google, Apple, and Facebook (Abbate, 2000; Tarnoff, 2022). Infrastructure is complex and fraught with the dynamics of power and authority (Winner, 1980). It is difficult to operate counter to the culture you come from without perpetuating it. If Web2 governance and capital allocation strategies are being perpetuated instead of new ones that facilitate the values of Web3, this has a direct effect on decentralized governance and community participation.

This DAO community, like many others, hasn’t yet figured out decentralized governance. For its next phase of growth and mission to empower its constituency, it has to. So far, the community has remained successfully intact, or “unforked”. Yet, “progressive decentralization” through the localisation of events is not the same as meaningful empowerment to govern the organization. Any DAO's goal and incentives should not be to exit a start-up, especially not a social DAO. To quote one main stage speaker, Kelani from eatworks.xyz, “The artist's goal is to misuse technology. It’s a subversive outcome”. DAOs come from political origins and are about developing infrastructure to facilitate countercultural social movements (Nabben, 2022). In this case, the aim is to subvert existing capital models and create an innovation flywheel for peer-to-peer production in sustainable ways. In the domain of creativity, even failure equals progress and a “victory for art”.

The animating purpose of FWB DAO is to allow people to gain agency by creating new economies and propagating cultural influence. Yet, they have resorted to traditional venture capital models to bootstrap their business. However, the purpose of creating opportunities for new economic models must carry through each localisation, whilst somehow aligning members with the overarching DAO. The concept of multi-stakeholder governance offers a pattern for how to design for this.

Applying the Criteria of Multi-stakeholder Governance to the Digital City

The principles that stakeholders adhere to in the governance of the Internet are one place to look for a historical example of how distributed groups govern the development and maintenance of distributed, large-scale infrastructure networks. Multistakeholderism acknowledges the multiplicity of actors, interests, and political dynamics in the governance of large-scale infrastructures and the necessity of meaningful stakeholder engagement in governance across diverse groups and interests. This allows entities to transform controversies, such as the VC “treasury diversification” raise, into productive dialogue which positions stakeholders in subsequent decision-making for more democratic processes (Berker et al., 2011). In the next section of this essay, I apply the criteria of meaningful multi-stakeholder governance as articulated by Malcolm (2015) to FWB DAO, as a potential model in helping the DAO balance stakeholder interests and participation as it diversifies and scales.

- Are the right stakeholders participating?

The right stakeholders to be participating in FWB DAO include all perspectives with significant interest in creating DAO policies or solving DAO problems. This includes core team members employed by the DAO, long-term as well as newer members, and investors. This requires structural and procedural admission of those who self-identify as interested stakeholders (Malcolm, 2015).

- How is their participation balanced?

In the community calls where FWB members got to conduct Q&A with their newfound investors, the VCs indicated their intention to ‘delegate’ their votes to existing members, but to whom remains unclear. There must be mechanisms to balance the power of stakeholders to facilitate them reaching a consensus on policies that are in the public interest (Malcolm, 2015). FWB does not yet have this in place (to my knowledge, at the time of writing). This can be achieved through a number of avenues, including prior agreement of the unique roles, contributions, expertise, and resource control of certain stakeholders, or deliberative processes that flatten hierarchies by requiring stakeholders to defend their position in relation to the public interest (Malcolm, 2015). Some decentralized communities have also been experimenting with governance models and mechanisms that are relevant in evolving governance beyond ‘yes’ - ‘no’ voting. One example of this is the use of “Conviction Voting” to signal community preference over time and pass votes dynamically according to support thresholds (Zargham & Nabben, 2022).

- How are the body and its stakeholders accountable to each other for their roles in the process?

FWB DAO is accountable to its members for the authority it exercises as a brand and an organization. Similarly, through localised events, participants are accountable for legitimately representing the FWB brand, using its tools (such as the Gatekeeper ticketing app), and acquiring new members that pay their dues back to the overarching DAO. Mechanisms for accountability include stakeholders accepting the exercise of the authority of the host body, the host body operating transparently and according to organizational best practices, and stakeholders actively participating according to their roles and responsibilities (Malcolm, 2015).

- Is the body an empowered space?

For multistakeholder governance to ensue, the host body must meaningfully include stakeholders in governance processes, meaning that stakeholder participation is linked to spaces in which definitive decisions are made and outcomes are reached, rather than just deliberation or expression of opinion (Malcolm, 2015).

At present, participation in FWB DAO governance is limited, at best. Proposals are gated by team members who help edit, shape, and craft the language according to a template before they can be posted to Snapshot by the Proposal Review Committee. Members can vote on proposals, with topics including “FWB x Hennessy Partnership,” grant selections, and liquidity management. According to core team members in their public talks, votes typically pass with 99% in favor every time, which is not a good signal of genuine political engagement and healthy democracy.

- Is this governance ideal maintained over time?

A criterion missing in the current principles on multistakeholderism is how the ideals of decentralized governance can persist over time. It is widely acknowledged that the Internet model of governance is not congruent with the initial values some held for a ‘digital public’, which has become privatized, monetized, and divisive. These inner power structures controlled by private firms and sovereign States permeate the architectures and institutions of Internet governance (DeNardis, 2014). Some argue that this corrupted ideal over time can be addressed by deprivatizing the Internet to re-direct power away from big tech firms and towards more public engagement and democratic governance (Tarnoff, 2022). In reality, both privatized network governance models and public ones can be problematic (Nabben et al., 2020). The promise of a social DAO, and crypto communities more broadly, is innovation in decentralized governance, to be able to make technical and political guarantees of certain principles.

The ideals of public, decentralized blockchain communities are at risk of following a similar trajectory to the Internet. What began with grassroots activism against government and corporate surveillance in the computing age (Nabben, 2021a) could be co-opted by the interests of big money, government regulation, and private competition (such as Central Bank Digital Currencies, Facebook’s ‘Meta’, Visa and Amex, etc.). For FWB to avoid this trajectory from enthusiastic early community to a centralized concentration of power, a long-term view of governance must be taken. This commands deeper consideration and innovation towards a pattern language for decentralized governance itself.

Conclusion

Experiencing the governance dynamics of a social DAO surfaces some of the challenges of coordinating the governance and scaling of distributed infrastructure that blends multi-stakeholder, online-offline dynamics with the values of decentralization. The goal of FWB DAO is to allow people to gain agency through the creation of new economies that then propagate through cultural influence. This goal must carry through each localization and somehow align back to the overarching DAO as the project scales to create not just culture but to further the cause of decentralization. What remains to be seen is how this creative community can collectively facilitate authentic, decentralized organizing for the impassioned believers through connections, tools, funding, and creative ingenuity on governance itself. Without incorporating the principles of meaningful multistakeholder inclusion in governance, DAOs risk becoming ‘a myth of decentralization’ (Mathew, 2016) that is riddled with power concentrations in practice. The principles of multi-stakeholderism from Internet governance offer one potentially viable set of criteria to guide the development of more meaningful decentralized governance practices and norms. Yet, multistakeholder governance is intended to balance public interests and political concerns in particular contexts, not as a model for all distributed governance functions (DeNardis & Raymond, 2013). Thus, the call to Decentralized Autonomous Organizations is to leverage the insights of existing governance models, whilst innovating their own principles and tools to continue exploring, applying, and testing governance models and authentically pursue their aims.

Bibliography

A16Z. (2021). “Investing in Friends With Benefits (a DAO)”. Available online: https://a16z.com/2021/10/27/investing-in-friends-with-benefits-a-dao/. Accessed October, 2022.

Abbate, J. (2000). Inventing the Internet. MIT Press, Cambridge.

Adams, T. E., Ellis, C., & Jones, S. H. (2017). Autoethnography. In The International Encyclopedia of Communication Research Methods (pp. 1–11). John Wiley & Sons, Ltd. https://doi.org/10.1002/9781118901731.iecrm0011.

Alexander, C. (1964). Notes on the Synthesis of Form (Vol. 5). Harvard University Press.

Brummer, C J., and R Seira. (2022). “Legal Wrappers and DAOs”. SSRN. Accessed 2 June, 2022. http://dx.doi.org/10.2139/ssrn.4123737.

Berker, T. (2011). “Michel Callon, Pierre Lascoumes and Yannick Barthe, Acting in an Uncertain World: An Essay on Technical Democracy”. Minerva 49, 509–511. https://doi.org/10.1007/s11024-011-9186-y.

DAOstar. (2022). “The DAO Standard”. Available online: https://daostar.one/c89409d239004f41bd06cb21852e1684. Accessed October, 2022.

DeNardis, L. (2013). “The emerging field of Internet governance”. In W. H. Dutton (Ed.), The Oxford handbook of Internet studies (pp. 555–576). Oxford, UK: Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199589074.013.0026.

DeNardis, L. (2014). The Global War for Internet Governance. Yale University Press: New Haven, CT and London.

DeNardis, L. and Raymond, M. (2013). “Thinking Clearly About Multistakeholder Internet Governance”. GigaNet: Global Internet Governance Academic Network, Annual Symposium 2013, Available at SSRN: https://ssrn.com/abstract=2354377 or http://dx.doi.org/10.2139/ssrn.2354377.

Epstein, D., C Katzenbach, and F Musiani. (2016). “Doing Internet governance: practices, controversies, infrastructures, and institutions.” Internet Policy Review.

FWB. (2022a). “FWB Fest 22”. FWB. Available online: https://fest.fwb.help/. Accessed October, 2022.

FWB. (2022b). “Kelsie Nabben: What are we LARPing about? | FWB Fest 2022”. YouTube (video). Available online: https://www.youtube.com/watch?v=UUoQ-sBbqeM. Accessed October, 2022.

FWB (n.d.). “Pulse”. FWB. Available online: https://www.fwb.help/pulse. Accessed October, 2022.

Gnosis. (2022). “Zodiac Wiki”. Available online: https://zodiac.wiki/index.php/ZODIAC.WIKI/. Accessed October, 2022.

Gottsegen, W. (2021). Available online: https://www.coindesk.com/tech/2021/10/07/designer-eric-hu-on-generative-butterflies-and-the-politics-of-nfts/. Accessed October, 2022.

Hassan, S., and P. De Filippi. (2021). "Decentralized Autonomous Organization." Internet Policy Review 10, no. 2:1-10.

Jose Meijia, (@JoseRMeijia). (2022). [Twitter]. “This is the way”. Available online: https://twitter.com/makebrud/status/1556691400367824896. Accessed 1 October, 2022.

Mailland, J. and K. Driscoll. (2017). Minitel: Welcome to the Internet. MIT Press, Cambridge.

Malcolm, J. (2008). Multi-Stakeholder Governance and the Internet Governance Forum. Wembley, WA: Terminus Press.

Malcolm, J. (2015). “Criteria of meaningful stakeholder inclusion in Internet governance.” Internet Policy Review, 4(4). https://doi.org/10.14763/2015.4.391.

Mathew, A. J. (2016). “The myth of the decentralised Internet.” Internet Policy Review, 5(3). https://doi.org/10.14763/2016.3.425.

Nabben, K. (2021a). “Is a "Decentralized Autonomous Organization" a Panopticon? Algorithmic governance as creating and mitigating vulnerabilities in DAOs.” In Proceedings of the Interdisciplinary Workshop on (de) Centralization in the Internet (IWCI'21). Association for Computing Machinery, New York, NY, USA, 18–25. https://doi.org/10.1145/3488663.3493791.

Nabben, K. (2021b). “Infinite Games: How Crypto is LARPing”. CoinDesk. Available online: https://www.coindesk.com/layer2/2021/12/13/infinite-games-how-crypto-is-larping/. Accessed October, 2022.

Nabben, K. (2022). “A Political History of DAOs”. FWB WIP. Available online: https://www.fwb.help/editorial/cypherpunks-to-social-daos. Accessed October, 2022.

Nabben, K., M. Poblet, and P. Gardner-Stephen. (2020). “The Four Internets of COVID-19: the digital-political responses to COVID-19 and what this means for the post-crisis Internet.” 2020 IEEE Global Humanitarian Technology Conference (GHTC), pp. 1-8. https://doi.org/10.1109/GHTC46280.2020.9342859.

Tarnoff, B. (2022). Internet for the People: The Fight for Our Digital Future. Verso Books: Brooklyn.

Winner, L. (1980). “Do Artifacts Have Politics?” Daedalus, 109(1), 121–136. Retrieved from http://www.jstor.org/stable/20024652.

Zargham, M., and K Nabben. (2022). “Aligning ‘Decentralized Autonomous Organization’ to Precedents in Cybernetics”. SSRN. Accessed June 2, 2022. https://ssrn.com/abstract=4077358.

Kelsie Nabben. (November 2022). “Decentralized Governance Patterns: A Study of "Friends With Benefits" DAO.” Interfaces: Essays and Reviews on Computing and Culture Vol. 3, Charles Babbage Institute, University of Minnesota, 86-101.

About the Author: Kelsie Nabben is a qualitative researcher in decentralised technology communities. She is particularly interested in the social implications of emerging technologies. Kelsie is a recipient of a PhD scholarship at the RMIT University Centre of Excellence for Automated Decision-Making & Society, a researcher in the Blockchain Innovation Hub, and a team member at BlockScience.

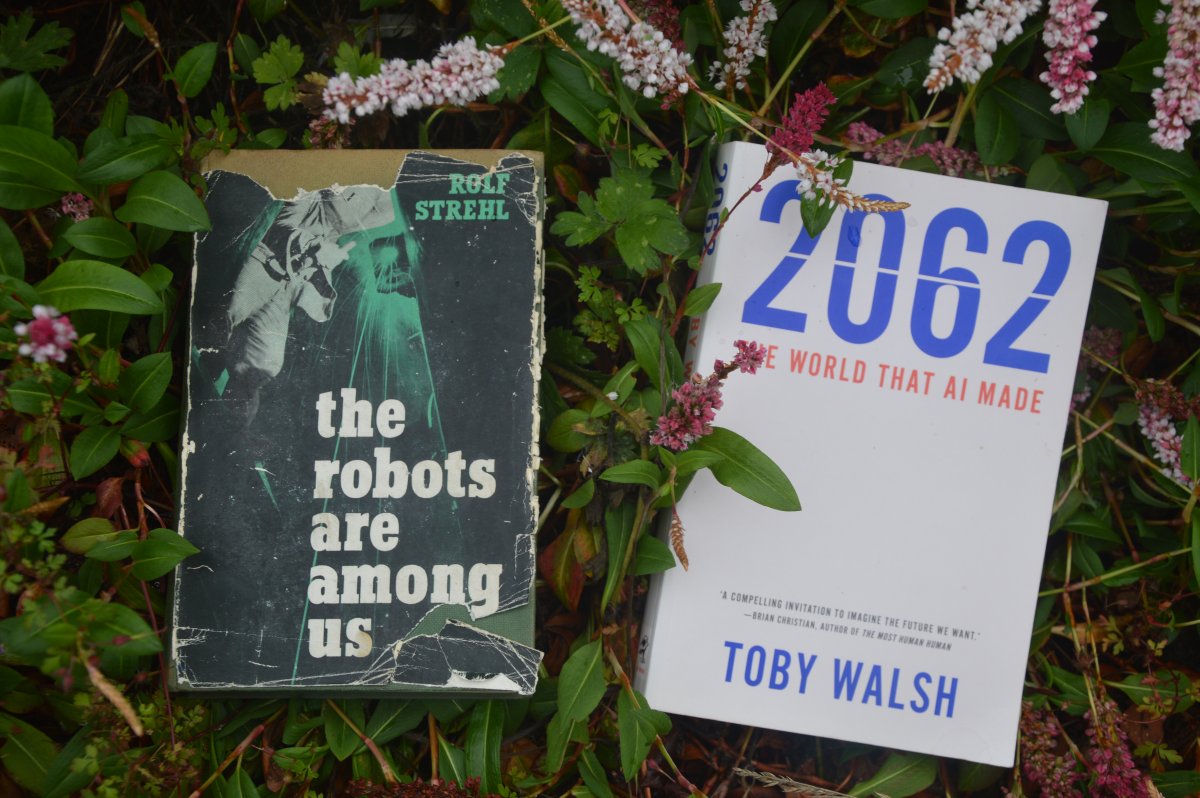

I want to share an anecdote. My doctoral fieldwork consisted of a mixed historical analysis and interview-based research on artificial intelligence (AI) promises and expectations. I had been attending numerous talks on AI and robotics and frequently posting on social media about interesting material I encountered during my doctoral investigations. On July 15th 2018, I received a generous gift by mail, sent by a very kind Instagram user named Chris Ecclestone, who, after a brief online chat about my PhD through the platform’s messaging utility, insisted he had to send me something he found at his local charity shop (the charity-oriented UK equivalent of a thrift store/second-hand shop). The book’s title was The Robots Are Among Us, authored by a certain Rolf Strehl, published in 1955 by the Arco Publishing Company.

I was only able to find very limited information about Strehl – the most comprehensive information available online comes from a blogpost written by workers at the Heinz Nixdorf MuseumsForum computer museum. From this, we learn, with the aid of online translation from German, that “he was born in Altona in 1925 and died in Hamburg in 1994,” that while writing this book “he was editor-in-chief of the magazine ‘Jugend und Motor’” (‘Youth and Motor,’ a popular magazine about automobiles), and that the book comes with a “number of factual errors” and “missing references.” According to the same website, the original 1952 German version, Die Roboter Sind Unter Uns (Gerhard Stalling Verlag, Oldenburg), was among the first two nonfiction books written about robots and intelligent machines in German, and was translated into several languages. A quick Google Images search proved that, in addition to my copy of the English translation, the book was also published, with slightly modified titles, in several other languages: in Spanish (Han Llegado Los Robots – Ediciones Destino, Barcelona), Italian (I Robot Sono Tra Noi – Bompiani Editore, Milan), and French (Cerveaux Sans Âme: Les Robots – Editions et publications Self, Paris). This suggests that the book was considered by several international publishers to be credible enough for wide circulation, and as the English version’s paper inlay states, the book “is written with a minimum of technical jargon. It is written for the layman [sic]. It is a scientific book, but it is a sensational book: for it illuminates for us the shape of things to come” (one has to note the use of the word “sensational,” which in current debates about public portrayals of AI is mostly used as a derogatory term, implying distance from technical legitimacy).

Thus, I suggest that the book deserves excavation as indicative of the mid-1950s promissory environment around thinking machines, prior to the coinage of the term AI, although the English translation overlaps with the year the term was coined (more below).

On July 9th, 2019, almost a year since I received Strehl’s book, I attended a talk at the University of Edinburgh by Toby Walsh, Scientia Professor of Artificial Intelligence at the University of New South Wales. Walsh, whose doctoral degree was obtained in Edinburgh, presented portions of his 2018 book 2062: The World that AI Made, which I acquired and read after the event. In contrast to Strehl’s rather obscure biographical notes, Walsh’s work is well documented on his personal website. In addition to his AI specialisation in constraint programming, Walsh’s work involves policy advising in building trustworthy AI systems as well as lots of public outreach through popular media.

The book, published in English by Black Inc./ La Trobe University Press, is of similar magnitude to Strehl’s, given that it has been translated widely: in German (2062: Das Jahr, in Dem Die Künstliche Intelligenz Uns Ebenbürtig Sein Wird, Riva Verlag, Munich), Chinese (2062:人工智慧創造的世界 – 經濟新潮社, Taipei), Turkish (2062: Yapay Zeka Dünyası – Say Yayınları, Ankara), Romanian (2062: Lumea Creata De Inteligenta Artificiala – Editura Rao, Bucharest), and Vietnamese (Năm 2062 -Thời Đại Của Trí Thông Minh Nhân Tạo – NXB Tổng Hợp TP. HCM, Ho Chi Minh City). Taking numbers of translations as an indication of magnitude, I suggest that Walsh’s book can be classified as somewhat comparable to Strehl’s, given that, as mentioned on his website, it is “written for a general audience.” Thus, regardless of the different degrees of AI expertise and respective contexts of their authors, I suggest these books can be contrasted as end-products indicating AI hype in 1955 and 2018. I hereby aim to recreate my personal experience with discovering the similarities between the two books.

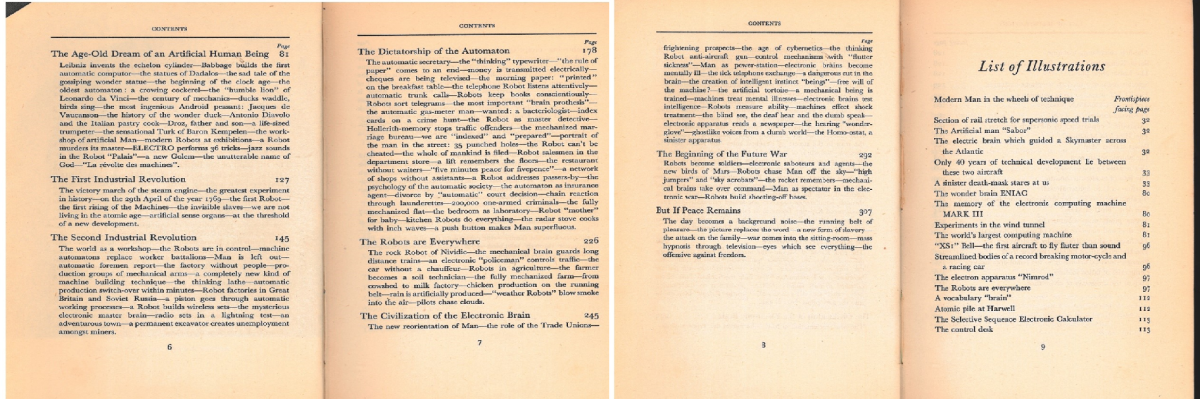

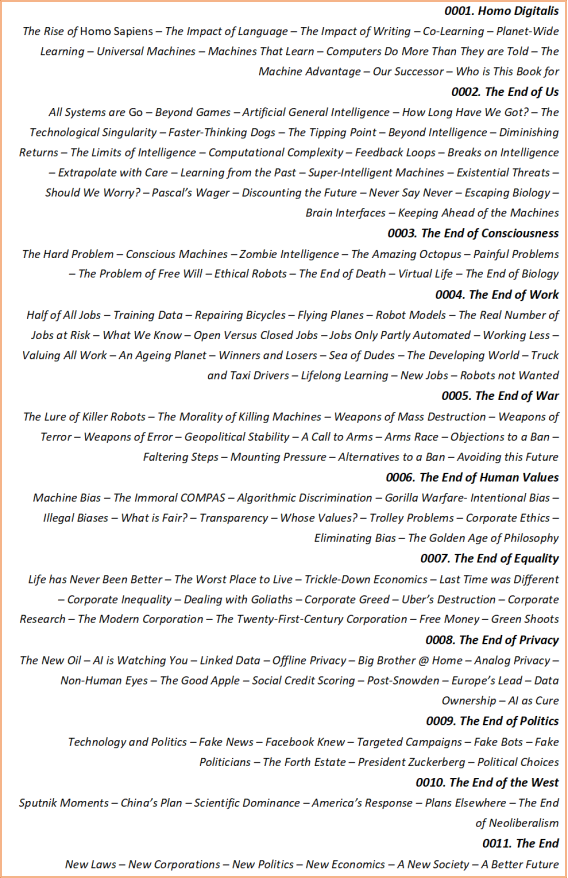

I now invite the reader to take a look through the tables of contents displayed at the end of this essay, upon which I will now comment. Strehl’s book contents have been scanned from the original whereas Walsh’s expanded book contents have been collated in a way to resemble Strehl’s for ease of comparison. (A note on presentation: As the reader will notice, Strehl’s chapters are followed by detailed descriptions of the chapters’ sections, very typical of books from that era. Walsh’s original table of contents includes only the main headings, although within the book sections similar to Strehl’s designate sub-chapters. I have manually copied this sub-heading structure on the table below in lieu of scannable content.) Notice similarities in both books’ first chapters, between “the failure of the human brain – the machine begins to think – ‘Homunculus’ is born – the beginning of a new epoch” (Strehl 1955) and “Machines That Learn – Computers Do More Than They are Told – The Machine Advantage – Our Successor” (Walsh 2018).

Both books’ second chapters review technological advances of machine intelligence of their times: Strehl describes the abilities of early computing and memory storing machines ENIAC, Mark III, and UNIVAC, as well as the possibility of “automatic weapons.” Meanwhile, Walsh describes recent breakthroughs in game-playing such as Go, although his chapter 0005 is entirely dedicated to “killer robots,” “weapons of terror,” and “of error,” pretty much like Strehl’s penultimate chapter “The Beginning of the Future War” which contains sections like “Robots become soldiers,” “mechanical brains take over command.” (Interestingly, Walsh does not refer to any cases of factory worker accidents involving robotic technologies, whereas Strehl mentions two cases of lethal robotic accidents in this chapter’s section “A Robot murders its Master,” similar to newspaper headlines about robotic killers; for example, Huggler 2015, McFarland 2016, or Henley 2022.)

Strehl’s third chapter asks, “Can the Robot Really Think?” in the same way that Walsh asks, “Should We Worry?” Both authors enquire on “The Age-Old Dream of an Artificial Human Being” (Strehl) and “Artificial General Intelligence – How Long Have We Got? – The Technological Singularity” (Walsh); and again, both refer to the question of “free will” in machines (Strehl: “Free will of the Machine?”; Walsh: “Zombie Intelligence […] The Problem of the Free Will”). Strehl dedicates two chapters to job displacement, “The Second Industrial Revolution,” focusing on industrial robotic technologies (“the Robots are in control – machine automatons replace worker battalions – Man [sic] is left out […] the factory without people”) and “The Dictatorship of the Automaton” mostly focusing on automation technologies conceptually similar to AI (“the automatic secretary […] the telephone Robot listens attentively – Robots keep books conscientiously – Robots sort telegrams […] the Robot as master detective […] the whole of mankind [sic] is filed – Robot salesmen [sic] in the department store […] divorce by ‘automatic’ court decision”). Although today’s equivalents (robot assistants like Alexa or Echo, robotic “judges,” and concerns about data surveillance) are much more technologically advanced, the sentiment captured in Strehl’s book is strikingly similar to several sections in Walsh’s: “The Real Number of Jobs at Risk,” “Jobs Only Partly Automated – Working Less” (on the dangers of job automation), “Machine Bias – The Immoral COMPAS – Algorithmic Discrimination” (on the cases of automated decision making as in the robotic judge COMPAS), and “AI is Watching You – Linked Data” (on the case of surveillance).

By this point, it has become sufficiently clear that concerns about automation technologies, which at different times (or in different regional and research contexts; consider the “I’m not a robot” captcha version of a Turing test) can be termed “AI” or “robots,” have been sustained with a surprisingly similar degree of comprehension. It is also worth noting some differences between the two books. First, it is useful to question how the authors gain what we might perceive as their promissory credibility, that is, the right to speculate about a new form of reality which is about to come. As already mentioned, Strehl falls short in terms of references – however, he sets out to clarify that the content presented is realistic: “This book is not about Utopia. It is a factual report of the present time collected from hundreds of sources.” Nevertheless, throughout his book, Strehl refers to warnings about machine intelligence expressed by pioneering minds in the field, often citing cyberneticist Norbert Wiener, but also mathematician Alan Turing, and others. Walsh’s approach is stricter, methodologically speaking, matching contemporary standards:

“In January 2017, I asked over 300 of my colleagues, all researchers working in AI, to give their best estimate of the time it will take to overcome the obstacles of AGI. And to put their answers in perspective, I also asked nearly 500 non-experts for their opinion. […] Experts in my survey were significantly more cautious than the non-experts about the challenges of building human-level intelligence. For a 90 per cent probability that computers match humans, the median prediction of the experts was 2112, compared to just 2060 among the non-experts [...] For a 50 per cent probability, the median prediction of the experts was 2062. That’s where the title of this book comes from: the year in which, on average, my colleagues in AI expect humankind to have built machines that are as capable as humans.” (AGI stands for Artificial General Intelligence, that is, the hypothesis that AI might be reaching or surpassing human intelligence, for example Goertzel 2014.)

Although the two authors exhibit different strengths in showing their research skills, they both rely on the credence of external sources to sustain their argument. Moreover, they agree on the possibility of a rather inevitable new form of world which is, in part, already here, and will invite humanity to think of new forms of living in the near future. Their difference is in their degree of optimism. Strehl agrees with Walsh that machines will always remain in need of human controllers, but suggests that machines will take control in a subtler way:

“Man [sic] will try to maintain his [sic] supremacy because the machines will always be limited creatures, without imagination and consciousness, incapable of inventiveness outside their own limits. But this supremacy of Man [sic] will only be an illusion, because the machines will have become so indispensable in an unimaginable mechanization of the technical civilization of the future that they will have become the rulers of this world, grown numb through technical perfection. The future mechanized order of society will not be able to continue existing without constant supervision of the thinking machines by their human creators. But the machines will rule.”

The following, more optimistic, passage by Walsh can be read as a hypothetical response to Strehl:

“But by 2062 machines will likely be superhuman, so it’s hard to imagine any job in which humans will remain superior to machines. This means the only jobs left will be those in which we prefer to have human workers.”

Walsh then refers to the emerging hipster culture characterised by appreciation of artisan jobs, craft beer and cheese, organic wine, and handmade pottery.

One should not forget that Walsh’s public outreach on AI extends in part from his lens as an AI researcher. His book is one that admits challenges, but also offers hopeful perspectives. Strehl’s book is written in a rather polemic fashion, although admitting the author’s fascination about the technical advancements; yet it is written by an outsider who has probably not built any robots, at least not in the way that Walsh has developed algorithms. The difference is one of balance: small doses of warning followed by hopeful promising (Walsh), as opposed to small doses of excitement followed by dystopian futurism (Strehl). It is telling of the existence of an expectational environment of AI which has been evolving at least since the second half of the 20th century, with its roots in the construction of early automata, as well as in mythology, religion, and literature.

Strehl’s book can be classified as indicative of broader narratives in circulation which might have influenced decisions within the domain of practice, although it is difficult to find evidence and make robust assumptions concerning the ways in which such broader public narratives about robots, thinking machines, and electronic brains have influenced the practical direction of research. Walsh’s book can be classified as a product of internal research practices and strategies, aimed at influencing broader narratives (the book’s popularity might be considered as evidence of some sort). The shared themes between the books show that the field (or vision) of intelligent machines (hereby examined as AI) is at the same time broad, yet recognisable and limited in its various instantiations, from automated decision-making to autonomous vehicles.

In this essay, I do not want to make another claim about history repeating itself and the “wow” effect of hype-and-disillusionment cycles – belief in a purely circular history is as reductionist as the belief in the modernist notion of linear progress and innovation. This instance of repetition of themes is not a call for a same old-same old caution that AI warnings are of no value because humanity’s previous experience proved so. It is, however, a call to raise awareness about hype, sensitivity about sensationalism, and to treat products of mass consumption about science and technology as artefacts produced by specific and variable social contexts on the micro scale (such as institutional agendas) and rather generalised and constant aspects of psychological patterns on the macro scale: hope and fear. In 1980, Sherry Turkle concluded that the black-box structure of computers evokes in their users different projected versions of their optimism or pessimism, thus resembling inkblot tests; she thus treated “computers as Rorschach.” 42 years after Turkle’s paper, computers and robots have evolved a lot – however, despite numerous calls for explainable AI systems, nothing prevents us from treating “AI as Rorschach” as well as “robots as Rorschach.” This might amount to a creative and therapeutic endeavour in our experience with AI.

Bibliography

Goertzel, Ben. (2014). Artificial general intelligence: concept, state of the art, and future prospects. Journal of Artificial General Intelligence, 5(1), 1-46.

Heinz Nixdorf MuseumsForum (2017). Die Roboter Sind Unter Uns. Blog post. November 7, 2017. Retrieved 18-06-2021 from: https://blog.hnf.de/die-roboter-sind-unter-uns/

Henley, Jon (2022, July 24). Chess robot grabs and breaks finger of seven-year-old opponent. The Guardian. 24-07-2022. https://www.theguardian.com/sport/2022/jul/24/chess-robot-grabs-and-breaks-finger-of-seven-year-old-opponent-moscow

Huggler, Justin. (2015, July 2). Robot Kills Man at Volkswagen Plant in Germany. The Telegraph. Retrieved 3-07-2015 from http://www.telegraph.co.uk/news/worldnews/europe/germany/11712513/Robot-kills-man-at-Volkswagen-plant-in-Germany.html

McFarland, Matt. (2016, July 11). Robot’s Role in Killing Dallas Shooter is a First. CNN Tech. Retrieved 29-04-2017 from http://money.cnn.com/2016/07/08/technology/dallas-robot-death/index.html

Strehl, Rudolf. (1952 [1955]). The Robots are Among Us. London and New York: Arco Publishers.

Turkle, Sherry. (1980). Computers as Rorschach: Subjectivity and Social Responsibility. Bo Sundin (ed.). Is the Computer a Tool? Stockholm. Almquist and Wiksell. 81–99.

Walsh, Toby. (2018). 2062: The World that AI Made. Carlton: La Trobe University Press, Black Inc.

Walsh, T. (2021, July 20th). Personal website. UNSW Sydney, accessed 20 July 2021, http://www.cse.unsw.edu.au/~tw/

Vassilis Galanos (October 2022). “Longitudinal Hype: Terminologies Fade, Promises Stay – An Essay Review on The Robots Are Among Us (1955) and 2062: The World that AI Made (2018).” Interfaces: Essays and Reviews on Computing and Culture Vol. 3, Charles Babbage Institute, University of Minnesota, 73-87.

About the Author: Vassilis Galanos (it/ve/vem) is a Teaching Fellow and Postdoctoral Research Associate at the University of Edinburgh, bridging STS, Sociology, and Engineering departments and has co-founded the local AI Ethics & Society research group. Vassilis’s research, teaching, and publications focus on sociological and historical research on AI, internet, and broader digital computing technologies with further interests including the sociology of expectations, studies of expertise and experience, cybernetics, information science, art, invented religions, continental and oriental philosophy.

Recently, the term “Quiet Quitting” has gained prominence in social media by employees who are changing their standards about work, and by business leaders who are concerned about the implications of this change of attitude and expectations at the workplace. The term initially started trending in social media with the posts from employees sharing their perspective. These employees are vocal about changing the standards of achievement and success at work, especially when work and home boundaries are no longer clear.

Quiet Quitting is a call from employees who still value their work but also want to feel valued and trusted in return. This is a call from those whose work and personal life are not balanced and who are looking for a healthier way to set boundaries. This is a reaction to the changes caused by the pandemic which allowed some employees to work from home, but which also further blurred the lines between work and home space. This is about corporations finding a multitude of ways to ensure their employees are connected to work around the clock, and is about the workers not wanting to be available to their employers for time for which they are not compensated, or work for which they are not recognized. It should not be a reason to criticize, shame, scare or surveil employees.

The pandemic caught many organizations unprepared for a sudden shift to remote work arrangements. Employers who were worried about the performance levels of their now-remote workers implemented several measures – some more privacy-invading than others. Unfortunately, for many companies, the knee-jerk reaction was to implement employee monitoring (or surveillance) software, sometimes referred to as ‘bossware’. Vendors selling this software tend to pitch their products as capable of achieving one or more of the following: “increase in productivity/performance; prevention of corporate data; prevention of insider threat; effective remote worker management; data-based decision-making on user behavior analytics; sentiment analysis to identify flight-risk employees.” The underlying assumptions of this set of functions are:

- employees cannot be trusted and left to do what they are hired to do;

- human complexity can be reduced to some data categories; and

- a one-size-fits-all definition of productivity exists, and the vendor’s definition is the correct one.

In response to employees who suggest they will only do what they are hired to do and not more until the expectations are changed, AI-based employee surveillance systems are now being discussed as a possible solution to ‘Quiet Quitting’. Employee surveillance was never a solution for creating equitable work conditions or increasing performance in a way which respected the needs of employees. It certainly cannot be a solution to the demands of workers trying to stay physically and mentally healthy.

The timing of tasks and employee activity monitoring in assembly lines and warehouses goes back to the times of Frederick Winslow Taylor. Taylorism aimed to increase efficiency and production and eliminate waste. It was also based on the “assumptions that workers are inherently lazy, uneducated, and are only motivated by money.” Taylor’s approach and practice have been brought to their contemporary height by Amazon with its minute-by-minute tracking of employee activity and termination decisions made by algorithmic systems. Amazon uses such tools as navigation software, item scanners, wristbands, thermal cameras, security cameras and recorded footage to surveil its workforce in warehouses, delivery trucks and stores. Over the last few years, employee surveillance practices have been spreading into white- and pink-collar work too.

According to a recent report by The New York Times, eight of the ten largest private U.S. employers track the productivity metrics of individual workers, many in real time. The same report details how employees described being tracked as “demoralizing,” “humiliating” and “toxic” and that 41% of employees report that nobody in their organization communicates with them about what data is collected and why or how it’s being used. Another 2022 report by Gartner shows the number of large employers using tools to track their workers has doubled since the beginning of the pandemic to 60%, with this number expected to rise to 70% within the next three years.

Employee surveillance software is extensive in its ability to capture privacy-invading data and make spurious inferences regarding worker performance. The technology can log keystrokes or mouse movements; analyze calendar activity of employees; screen emails, chat messages or social media for both the activity intervals and content; take screenshots of the monitor at random intervals; analyze which websites the employee has visited and for how long; force activations of webcams; and monitor the terms searched by the employee. As an article in The Guardian on AI-based employee surveillance tools explains, the concerns regarding the use of these products range from the obvious privacy invasion in one’s home to reducing workers, their performance, and bodies to lines of code and flows of data which are scrutinized and manipulated. Systems which automatically classify a worker’s time into “idle” and “productive” reflect the value judgments of their developers about what is and is not productive. An employee spending time at a colleague’s desk explaining work or mentoring them for better productivity can be labeled by the system as “idle”.

Even though natural language processing is not capable of understanding context, nuance or intent of language, AI tools which analyze the content and tone of one’s emails, chat messages or even social media posts ‘predict’ if a worker is a risk to the company. Forcing employees who work from home to keep their camera on at all times can lead to private and protected information of the employee being disclosed to the employer. Furthermore, these systems remove basic autonomy and dignity at the workplace. They force employees to compete rather than cooperate and think of ways to game the system rather than thinking of more efficient and innovative ways to do their work. A CDT report focuses on how bossware can harm workers’ health and safety by discouraging and even penalizing lawful, health-enhancing employee conduct; enforcing a faster work pace and reduced downtime, which increases the risk of physical injuries; and increasing the risk of psychological harm and mental health problems for workers.

Just as employee surveillance cannot replace trusting and transparent workplace relationships, it cannot be a solution to Quiet Quitting. Companies implementing such systems do not understand the fundamental reasons for this call. The reasons for such a call are not universal and there is no single solution for employers. The responses may range from fairer compensation to better communication practices to investment in employees’ skills to setting boundaries between work and personal life. Employers need to create space for open communication and understand the underlying reasons for frustration and the call for change. Employers need to ‘hear’ what their employees are telling them, not surveil them.

==============================

Disclosure: The author also provides capacity building training and consulting to organizations for AI system procurement due diligence, responsible design, and governance. Merve Hickok is a certified Human Resources (HR) professional with 20 years of experience, an AI ethicist and AI policy researcher. She has written extensively about different sources of bias in recruitment algorithms, their impact on employers and vendors, and AI governance methods; provided public comments for regulations in different jurisdictions (New York City Law 144; California Civil Rights Council; White House Office of Science and Technology RFI); co-crafted policy statements (European Commission); and contributed to the drafting of audit criteria for AI systems (ForHumanity). She has been invited to talk at a number of conferences, webinars and podcasts on AI and recruitment, HR technologies and their impact on candidates, employers, businesses and the future of work, and has been interviewed by both HR professional organizations (SHRM Newsletter, SHRM opinion pieces) and newspapers (The Guardian) about her concerns and recommendations.

Bibliography

Bose, Nandita (2020). “Amazon's surveillance can boost output and possibly limit unions – study.” Reuters, August 31.

Corbyn, Zoe (2022). “‘Bossware is coming for almost every worker’: the software you might not realize is watching you.” The Guardian, April 27.

Kantor J, Sundaram A, Aufrichtig A, Taylor R. (2022). “The Rise of the Worker Productivity Score.” New York Times, August 14.

Scherer, Matt and Brown, Lydia X. Z. (2021). “Report – Warning: Bossware May Be Hazardous to Your Health.” CDT, July 24.

Turner, Jordan (2022). “The Right Way to Monitor Your Employee Productivity.” Gartner, June 9.

Williams, Annabelle (2021). “5 ways Amazon monitors its employees, from AI cameras to hiring a spy agency.” Business Insider, April 5.

Wikipedia. Digital Taylorism. https://en.wikipedia.org/wiki/Digital_Taylorism.

Merve Hickok (September 2022). “AI Surveillance is Not a Solution for Quiet Quitting.” Interfaces: Essays and Reviews on Computing and Culture Vol. 3, Charles Babbage Institute, University of Minnesota, 65-72.