Computational Astrophysics

The Laboratory for Computational Science & Engineering (LCSE), under the direction of Paul Woodward, is engaged in a wide range of research projects. The lab focuses mainly on collaborative projects among government, industry, K-12 schools, and the University's Institute of Technology. Examples of research by Tom Jones and his students at the Minnesota Supercomputing Institute include simulations of galaxy clusters, the evolution of turbulence, radio galaxy dynamics, and cosmic-ray acceleration and transport.

Computational Astrophysics at the Minnesota Supercomputing Institute (MSI)

Research Topics

Tom Jones – Computational Fluid Dynamics and Particle Acceleration

Paul R. Woodward – Simulating Stellar Evolution

Tom Jones

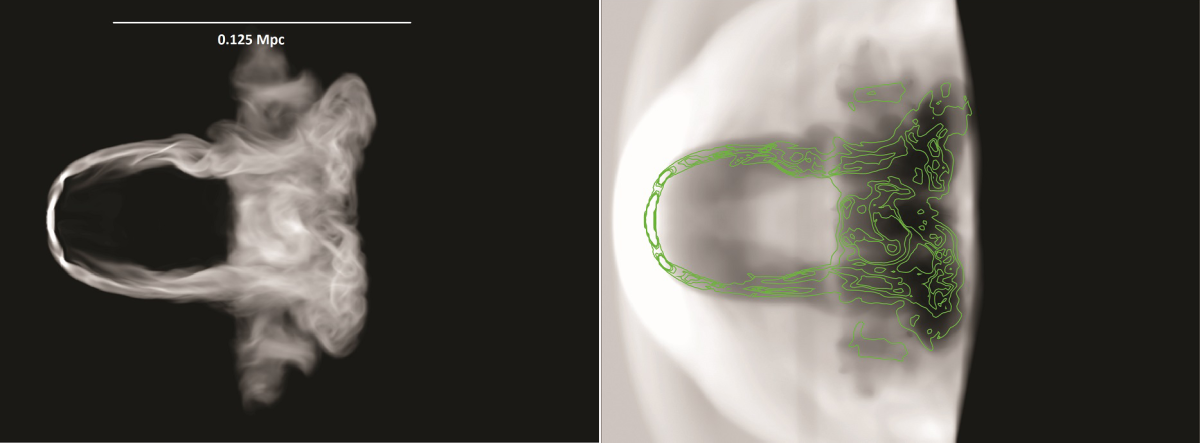

I am a theoretical astrophysicist whose work centers on the dynamics of magnetized fluids and plasmas, along with the associated acceleration and transport of energetic particles (cosmic rays) in multiple contexts. Much of our work involves numerical simulations carried out with codes developed in our group, so a substantial part of our computational effort goes into developing and testing computational tools. The example images, derived from one of our MHD simulations of a cluster-like shock wave interacting with a radio galaxy, show in the upper panel the predicted 150 MHz radio synchrotron intensity from cosmic-ray electrons and, in the lower panel, the associated 1–10 keV thermal X-ray emission (with radio contours taken from the upper image). In this case, the shock propagated from left to right, orthogonal to the AGN jets that form the radio galaxy.

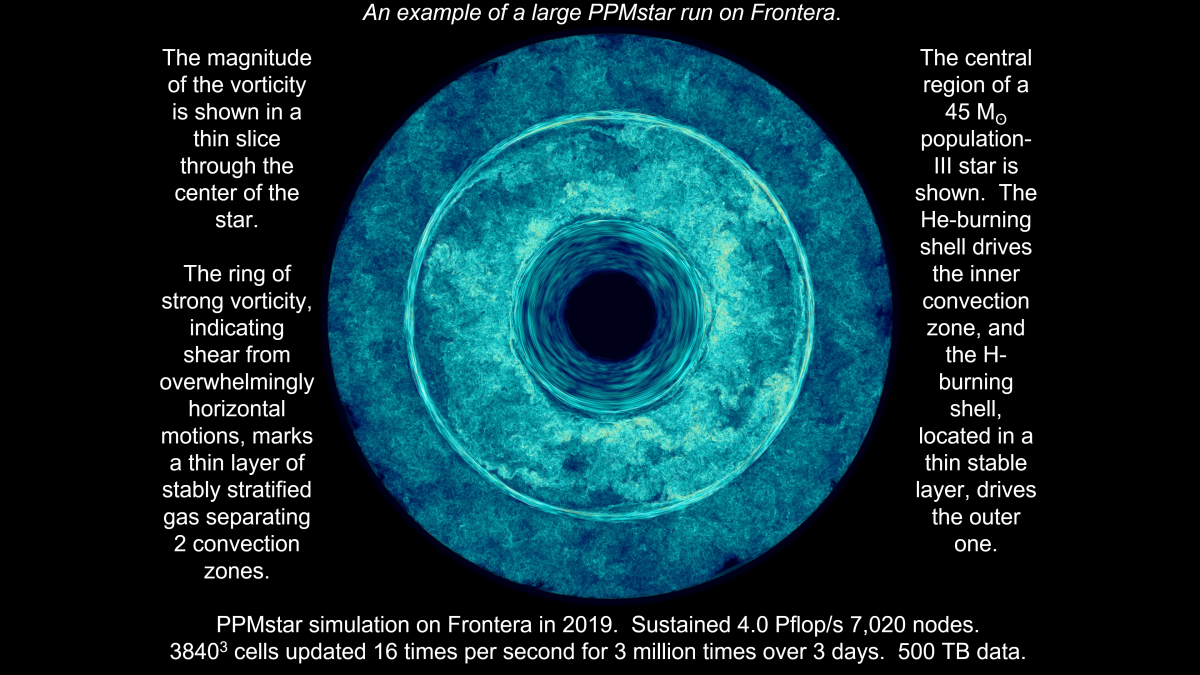

Paul R. Woodward

Professor Paul Woodward has developed a series of numerical methods for multifluid gas dynamics that underpin his research and are also used in a variety of community codes, such as FLASH and ENZO. As director of the University's Laboratory for Computational Science & Engineering (LCSE) within the Digital Technology Center, Woodward has led a number of collaborations with national laboratories and industry that have advanced computing technologies and the ability of simulation codes to exploit them. Woodward chaired the Science and Engineering Team Advisory Committee (SETAC) for the NSF's Blue Waters Track-1 computing project from 2013 to 2019. NSF has since shifted its premier national computing project to Frontera, at the Texas Advanced Computing Center, and Woodward has moved his simulation work to that center.

In his recent research, Woodward has used the entire Frontera machine to simulate the interaction between two overlying convection zones in a massive star that are separated by only a very thin layer of stably stratified material (cf. www.lcse.umn.edu). How a star behaves in this situation has been an outstanding problem in stellar evolution theory for decades. Running on 7,020 nodes of Frontera at over 4 Pflop/s, Woodward's latest simulation code, PPMstar, updates a description of the star (see figure) on a grid of 56.6 billion cells 16 times per second. Operating at this scale and speed makes it possible to simulate important stages of stellar evolution that are brief, but not as brief as an explosion. The advent of still larger and faster machines, such as Argonne National Laboratory's Aurora, expected in 2021, will further expand our ability to simulate such events in detail and with confidence that the results are accurate.

These new machines pose substantial challenges to simulation code design because they integrate advanced CPU and GPU technologies and can generate datasets exceeding 1 petabyte per simulation. One focus of Woodward's research in computational astrophysics is how to meet these challenges and thus harness the power of the coming generation of exascale computing systems.
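The throughput figures quoted for the PPMstar run on Frontera can be checked with a little arithmetic. The sketch below takes only the numbers stated above (56.6 billion cells, 16 grid updates per second, 7,020 nodes, 4 Pflop/s) and derives cell updates per second, cells per node, and the implied floating-point work per cell update. It assumes that "updated 16 times per second" means 16 full-grid updates per wall-clock second and treats "over 4 Pflop/s" as exactly 4e15 flop/s, so the per-update figure is a lower bound; the function name `throughput_summary` is hypothetical.

```python
# Back-of-envelope check of the PPMstar-on-Frontera figures quoted in the text.
# Assumption: "16 times per second" = 16 full-grid updates per wall-clock second.
# Assumption: "over 4 Pflop/s" is taken as exactly 4e15 flop/s, so the derived
# flop-per-cell-update figure is a lower bound, not a measured value.

CELLS = 56.6e9           # grid cells in the stellar model (from the text)
UPDATES_PER_SECOND = 16  # full-grid updates per second (from the text)
NODES = 7020             # Frontera nodes used (from the text)
FLOP_RATE = 4.0e15       # sustained flop/s (stated as "over 4 Pflop/s")


def throughput_summary(cells, updates_per_s, nodes, flop_rate):
    """Hypothetical helper: derive simple throughput figures from run parameters."""
    cell_updates_per_s = cells * updates_per_s
    return {
        "cell_updates_per_s": cell_updates_per_s,           # cells advanced per second
        "cells_per_node": cells / nodes,                     # domain share per node
        "flop_per_cell_update": flop_rate / cell_updates_per_s,
        "flop_rate_per_node": flop_rate / nodes,             # flop/s per node
    }


if __name__ == "__main__":
    summary = throughput_summary(CELLS, UPDATES_PER_SECOND, NODES, FLOP_RATE)
    for name, value in summary.items():
        print(f"{name:>22}: {value:.3e}")
```

Under these assumptions the run advances roughly 9 × 10^11 cells per second with about 8 million cells per node, which implies on the order of 4,400 or more floating-point operations per cell update. How flops are counted varies between codes, so this is only a rough consistency check.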