MURAJ: In Focus - Application of Artificial Intelligence to Help Fight COVID-19

Written by Gaoxiang Luo for the Minnesota Undergraduate Research & Academic Journal (MURAJ)

Artificial intelligence (AI) is gradually changing modern medical practice. With recent progress in deep learning, a branch of the broader family of machine learning methods, AI applications are expanding into medical areas that were previously thought to be the exclusive province of human experts. In February 2020, radiologists found that patients’ chest x-rays had high predictive power for diagnosing coronavirus disease (COVID-19), and a group of researchers led by Computer Science & Engineering assistant professor Ju Sun began working on an AI algorithm to improve COVID-19 diagnosis.

Le Peng, a Ph.D. student working with Professor Sun, met with the Minnesota Undergraduate Research & Academic Journal (MURAJ) to discuss the technical details of this AI algorithm, which may intrigue undergraduate students interested in deep learning, and to share his experience of doing research during an unprecedented global pandemic.

Le has been passionate about machine learning and computer vision since he was an undergraduate student, which motivated him to go to graduate school for further academic pursuit. People like Le in the discipline of computer vision believe that machines see things that we do not. Before the global pandemic, Le’s research was motivated by the fact that fractured ribs are difficult for even expert radiologists to identify visually, and he had been working closely with M Health Fairview to build an automatic detection system for rib fractures. But as COVID-19 arrived, he realized that detecting viral symptoms and detecting rib fractures are very similar tasks; the main difference is that the model looks for respiratory symptoms rather than the location of rib fractures. He then worked on this AI algorithm until it was deployed in all 14 M Health Fairview hospitals as well as 450 other health institutions in the US with the help of Epic Systems Corp., a medical software company. The fast roll-out was driven by the AI’s rapid, accurate image interpretation, which helped identify COVID-19 (see Figure 2) when only a limited number of testing kits were available.

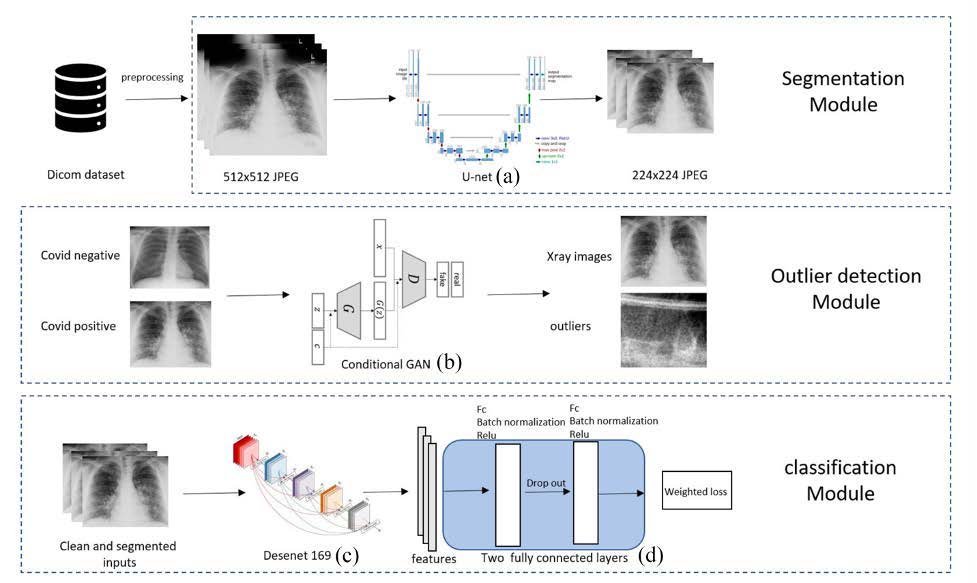

This AI algorithm is a modern deep learning approach with one neural network in each module (see Figure 2). A neural network is a set of functions, modeled loosely after the human brain, that is designed to recognize patterns. The AI algorithm is composed of three stages: the first two stages perform data cleaning, and the last stage predicts how likely a patient is to have COVID-19. Le explained that two stages of data cleaning are necessary because “medical data is messy, and more than 70% of the time you’re doing data preprocessing.” For instance, the database may contain failed and even mislabeled x-ray images, and a single x-ray can carry hundreds of metadata tags that are not useful for the algorithms. Deidentification of the x-ray images is also necessary to protect patient privacy. Le further explained that working with industry data is quite different from working with the clean, processed data provided in a classroom setting. When building models like this, most of the time goes to cleaning the data before any algorithm is applied.

The data cleaning is itself done by machine learning, which is useful because cleaning the data manually would take considerable human effort. The AI algorithm starts with a segmentation module called U-Net, which accurately segments the lung area of chest x-rays. It is called U-Net simply because its architecture is U-shaped (see Figure 3 (a)). Radiologists noticed that the visual patterns related to COVID-19 mostly fall in the lung regions of the chest, with visual characteristics such as ground-glass opacity and consolidation. With the lungs segmented, the machine can focus on learning visual information only from the lungs by cutting out all the unnecessary regions, which is why the images are smaller after the U-Net segmentation module in Figure 3.
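To illustrate why the images shrink after segmentation, here is a minimal sketch of cropping an x-ray to its lung mask. It is not the researchers’ code: the function name `crop_to_mask`, the toy image, and the hand-written mask are assumptions made for illustration, and the mask simply stands in for what a segmentation network such as U-Net would output.

```python
def crop_to_mask(image, mask):
    """Crop `image` to the smallest rectangle containing every nonzero mask pixel.

    `image` and `mask` are equally sized lists of rows. The mask is a stand-in
    for a segmentation network's output (1 = lung, 0 = background).
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows or not cols:
        raise ValueError("mask contains no lung pixels")
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    # Zero out background pixels inside the box, then keep only the box.
    return [
        [image[r][c] if mask[r][c] else 0 for c in range(left, right + 1)]
        for r in range(top, bottom + 1)
    ]


# Toy 5x6 "x-ray" whose pixel values are just row * 10 + column.
image = [[r * 10 + c for c in range(6)] for r in range(5)]
# Hand-written mask (an assumption); a real one would come from the network.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 1, 0],
    [0, 1, 1, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
cropped = crop_to_mask(image, mask)
print(len(cropped), "x", len(cropped[0]))  # 3 x 4
```

The 5x6 input becomes a 3x4 output because everything outside the bounding box of the mask is discarded, which mirrors the smaller images the article describes.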

The next stage removes outliers, including failed x-rays with artifacts or wrong projections. Notably, rather than having an expert go through a large number of x-rays, the filtering is done by a neural network called a conditional Generative Adversarial Network (GAN), which distinguishes outliers from valid x-rays. The idea behind a GAN is to build a filter from two parties: a generator and a critic. The generator creates fake x-rays that look as real as it can make them, and the critic gives feedback to the generator so that it can create better ones next time. Through this process, the GAN becomes discerning enough to tell which x-rays are valid inputs for the classification stage (see Figure 3 (b)).

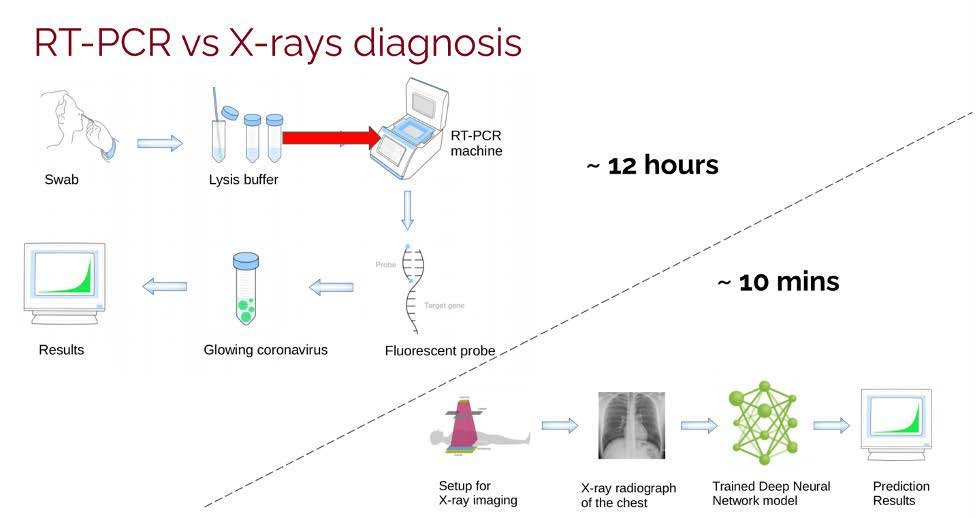

Once the data is cleaned and segmented by the first two stages, Le stated that the last and most important stage of this AI algorithm is the classification module (see Figure 3 (c)). Here, all the inputs are passed through a complex, computationally expensive neural network to train the classifier, which is where the pipeline learns the patterns of COVID-19 symptoms and predicts the disease. The researchers call the neural network complex because it works very well on medical images, yet they do not fully understand why. For this reason, the neural network approach is also known as a black-box approach. Once complete, the entire pipeline predicts the probability of COVID-19 from the raw chest x-ray. With this model, you can take a chest x-ray and your doctor will be informed of the likelihood of COVID-19 within 10 minutes, which helps them diagnose the virus more accurately and efficiently.
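The flow through the three stages can be sketched as a small function that segments, filters, and then classifies. This is only an illustration of the control flow under stated assumptions: `run_pipeline` and the three `toy_*` functions are hypothetical names, and the toy stages are trivial stand-ins for the neural networks described in the article.

```python
import math
from typing import Callable, List, Optional

Image = List[List[float]]


def run_pipeline(
    xray: Image,
    segment: Callable[[Image], Image],
    is_valid: Callable[[Image], bool],
    classify: Callable[[Image], float],
) -> Optional[float]:
    """Segment, filter, then classify; return None for rejected x-rays."""
    lungs = segment(xray)      # stage 1: keep only the lung region
    if not is_valid(lungs):    # stage 2: drop failed or outlier images
        return None
    return classify(lungs)     # stage 3: probability of COVID-19


# Hypothetical stand-ins so the sketch runs; the real stages are neural networks.
def toy_segment(xray: Image) -> Image:
    return [row[1:-1] for row in xray[1:-1]]  # trim a one-pixel border


def toy_is_valid(lungs: Image) -> bool:
    return any(pixel > 0 for row in lungs for pixel in row)  # reject blank images


def toy_classify(lungs: Image) -> float:
    pixels = [p for row in lungs for p in row]
    mean = sum(pixels) / len(pixels)
    return 1 / (1 + math.exp(-(mean - 0.5) * 4))  # squash a score into (0, 1)


good = [[0, 0, 0, 0], [0, 0.9, 0.7, 0], [0, 0.8, 0.6, 0], [0, 0, 0, 0]]
blank = [[0.0] * 4 for _ in range(4)]
good_result = run_pipeline(good, toy_segment, toy_is_valid, toy_classify)
blank_result = run_pipeline(blank, toy_segment, toy_is_valid, toy_classify)
print(good_result)   # a probability between 0 and 1
print(blank_result)  # None
```

Returning `None` for a rejected image keeps the filtering decision separate from the prediction, so a failed x-ray is never reported as a low-probability case.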

When compared to the typical nasal swab test, this AI algorithm achieves a similar area under the precision-recall curve (AUPRC) in a much shorter amount of time. The precision-recall curve is a 2D plot whose horizontal axis is recall and whose vertical axis is precision, and the AUPRC is the area beneath it. More specifically, recall asks what proportion of actual positives were identified correctly, while precision asks what proportion of positive identifications were actually correct. Le clarified that researchers typically do not use accuracy as a metric because medical data is usually imbalanced, meaning there are usually far more negative cases than positive cases. In addition, doctors usually weight certain metrics more heavily than others. For instance, they are more concerned about a false negative in COVID-19 than a false positive, because they do not want to miss any patient who has the disease.
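A small worked example may make these metrics concrete. The sketch below uses made-up labels and scores for ten patients (two positive, eight negative); none of the numbers come from the study. It computes precision and recall at a threshold, a step-wise estimate of the AUPRC (often called average precision), and the accuracy of a useless model that calls everyone negative. The function names are illustrative, and the AUPRC estimate assumes no two patients share the same score.

```python
def precision_recall(labels, scores, threshold):
    """Precision and recall when scores >= threshold are called positive."""
    tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(labels, scores) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(labels, scores):
    """Step-wise area under the precision-recall curve (assumes distinct scores)."""
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive label")
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    tp = 0
    area = 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            tp += 1
            area += tp / rank  # precision at the point where recall increases
    return area / total_pos


# Made-up, imbalanced data: 1 = has COVID-19, 0 = does not.
labels = [1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.2, 0.7, 0.1, 0.4, 0.05, 0.15, 0.25]

precision, recall = precision_recall(labels, scores, threshold=0.5)
auprc = average_precision(labels, scores)
always_negative_accuracy = labels.count(0) / len(labels)

print(round(precision, 3), recall)  # 0.667 1.0
print(round(auprc, 3))              # 0.833
print(always_negative_accuracy)     # 0.8
```

Calling every patient negative already scores 80% accuracy on this toy data while finding no cases at all, which is why accuracy is a poor guide for imbalanced data and precision and recall are reported instead.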

When asked if the AI algorithm would fail for patients who have COVID-19 without symptoms in their lungs, Le noted that this scenario resembles the mild cases in their experiments. Indeed, the lungs of mild cases look similar to healthy lungs; however, the model does not make the diagnosis itself but rather gives doctors a likelihood of COVID-19 so they can make a better decision. Le further explained that it is also difficult for human experts to diagnose mild cases solely from chest x-rays. Nevertheless, as Le said, the AI algorithm is robust in predicting a high likelihood of COVID-19 for moderate and severe cases, and more than 90% of patients identified as having COVID-19 actually had the virus, as shown in their internal testing in July 2020 before the AI algorithm was deployed to hospitals. The whole prediction process, including the time for x-ray imaging, takes a patient only about ten minutes to obtain a result. By contrast, the RT-PCR machine (used for most COVID-19 tests) needs around 12 hours to produce a result (Figure 2). When asked how to ensure this AI algorithm would also work at other health institutions, where the x-ray machines might be made by different vendors and the patients might have different demographic patterns, Le answered that they had performed external validation, which showed that the model also works at other hospitals with only a small amount of new data needed to fine-tune it. That is to say, the algorithm can learn from a small amount of new data and adjust itself to perform better at these different institutions.

When asked about one of the challenges of doing research during the pandemic, Le summarized it in one word: communication. It is not easy to reach out to other people via Zoom, but he encourages students to stay active and follow different professors’ recent research by reading their websites or recent publications. He also encourages students not to focus only on their own areas of study but to think of interdisciplinary fields where they could bring together two groups of students with different perspectives. Finally, Le briefly described a couple of promising future directions for active research in computational medical imaging. Neural networks, including the AI algorithm in this article, remain a black-box approach: researchers know they work well but do not fully understand why. Therefore, one of Le’s future aspirations is to research AI trustworthiness and interpretability. Moreover, Le has found preliminary results showing that a shallow neural network could potentially reach the same performance as a deep neural network in medical imaging. If successful, this method would help deploy the model on small and even portable devices and make it available in areas where computational resources are scarce.

This profile is part of the series MURAJ In-Focus, organized by the Minnesota Undergraduate Research & Academic Journal, with funding from the University of Minnesota Office of Undergraduate Education.

Le Peng is a second-year Ph.D. student in Computer Science at the University of Minnesota, advised by Prof. Ju Sun. His research interests are AI in health care, computer vision, and machine learning.

Gaoxiang Luo is a third-year undergraduate student in Computer Science. His current research projects involve rib fracture detection on CT scans and wetland mapping on aerial imagery. He serves as a reviewer for the Minnesota Undergraduate Research & Academic Journal.