Research update: Vision-based AI systems

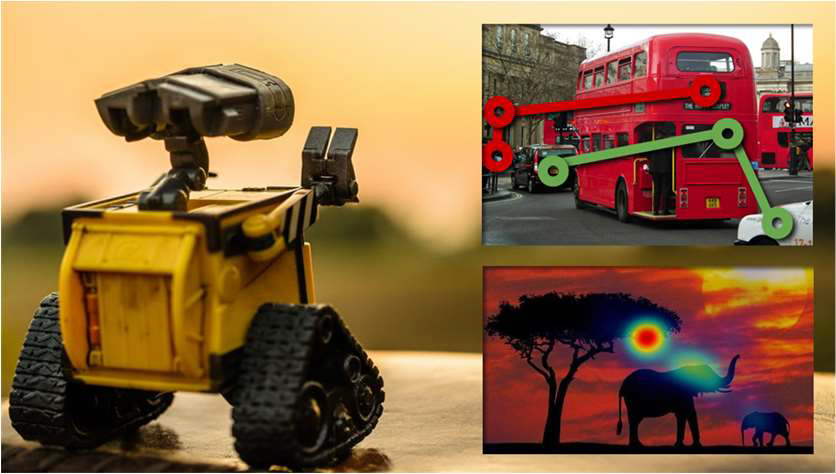

Upper right: Newly invented deep learning and explainable AI models add vision and reasoning capabilities to machines, and can distinguish between correct (green) and incorrect (red) decisions.

Lower right: Deep learning models reveal intricate eye-movement patterns in people on the autism spectrum, revolutionizing mental health screening.

Recent breakthroughs in artificial intelligence, in both algorithms and infrastructure, make it possible for machines to assist humans in daily tasks. However, there is still a clear gap between existing AI and what science fiction has pictured. One fundamental limitation is the inability of machines to ‘see’ what’s happening in the world around them.

To bridge this gap, Assistant Professor Catherine Zhao and her lab, the Visual Information Processing Lab (UMN VIP), are dedicating a large portion of their research to the development of vision-based AI and its emergent applications.

Visual artificial intelligence

Imagine that you are at a bus stop in a new city. You glance around a few times, parse and summarize the information you gather, and decide on your next steps. Humans are very efficient at actively selecting and parsing the most relevant information from their environment. Although this seems intuitive, it reflects a highly sophisticated ability that machines lack; giving machines this ability could enhance their performance and support the creation of machine intelligence.

Researchers in the UMN VIP lab have developed attention capabilities and integrated them into AI agents, enabling the agents to acquire, learn from, and reason with information and to perform a wide range of tasks. They have also invented experimental paradigms that have become standard approaches for collecting large-scale attention data, and contributed multiple large-scale datasets that have advanced deep learning research and model development [1, 2].

Explainable and generalizable vision

The increasing scrutiny of black-box machine learning has highlighted the challenge of understanding how complex machine learning algorithms make decisions. In response, Professor Zhao's team has developed methods to understand and visualize deep neural networks and, more recently, has focused on quantifiable approaches to explainable and generalizable AI. For example, they recently designed a mathematically defined metric to quantify the reasonability of AI models, and developed a computational framework that teaches machines to perceive and reason with common-sense knowledge when making decisions, enhancing both the interpretability and the generalizability of AI systems [3].

Vision-based AI systems in healthcare applications

The lab's AI contributions also extend into broader contexts, amplifying their impact. The team has been developing and applying its theories, algorithms, and data to solve problems in healthcare, leading to new discoveries, tools, and clinical prototypes.

For example, their innovative experimental methods and deep learning models “open new avenues for quantitative, objective, simple, inexpensive, and rapid evaluation of mental health” and “revolutionize mental health screening,” as quoted from a Neuron Preview discussing their research on deciphering the neurobehavioral signature of autism [4]. In another example, the team's novel deep learning technique addresses previously unresolved challenges posed by highly complex and dynamic human neural data, achieving groundbreaking intention decoding that helps amputee patients “get back to natural hand.”

[1] M. Jiang*, S. Chen*, J. Yang, and Q. Zhao, “Fantastic Answers and Where to Find Them: Immersive Question-Directed Visual Attention”, CVPR 2020. *Equal authorship.

[2] Y. Luo, Y. Wong, M. Kankanhalli, and Q. Zhao, “Direction Concentration Learning: Enhancing Congruency in Machine Learning”, TPAMI 2020.

[3] M. Jiang*, S. Chen*, J. Yang, and Q. Zhao, “AiR: Attention with Reasoning Capability”, ECCV 2020. *Equal authorship.

[4] S. Wang*, M. Jiang*, X. M. Duchesne, E. A. Laugeson, D. P. Kennedy, R. Adolphs, and Q. Zhao, “Atypical Visual Saliency in Autism Spectrum Disorder Quantified through Model-based Eye Tracking”, Neuron (Featured on the Cover) 2015. *Equal authorship.