Paper on rethinking mental health interventions recognized at CSCW

Congratulations to GroupLens researchers for earning an Honorable Mention award at this year's ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW).

The award-winning paper introduces Flip*Doubt, a crowd-powered prototype for mental health. It argues that systems should be designed to expand how mental health treatment is delivered, by finding better, safer, and more targeted ways for peers to deliver effective, clinically validated therapeutic interventions.

C. Estelle Smith (Ph.D. 2020) and William Lane (M.S. 2020) were co-lead authors on the paper. Other GroupLens researchers, including Hannah Miller Hillberg (Ph.D. 2018), Daniel Kluver (Ph.D. 2018), Loren Terveen (professor), and Lana Yarosh (associate professor) also contributed to the work.

"We’re honored that this paper won an Honorable Mention at CSCW 2021, since the world truly needs new interventions like Flip*Doubt to help us all to help each other," shared Smith, who is now a postdoc at the University of Colorado Boulder in the College of Media, Communication and Information.

CSCW is the leading conference for presenting research in the design and use of technologies that affect groups, organizations, and communities, and brings together top researchers and practitioners from academia and industry who are interested in both the technical and social aspects of collaboration.

How crowd-powered therapy can help everyone help everyone

Below is a blog post written by C. Estelle Smith that explains the idea behind Flip*Doubt and the results of the deployment in more detail.

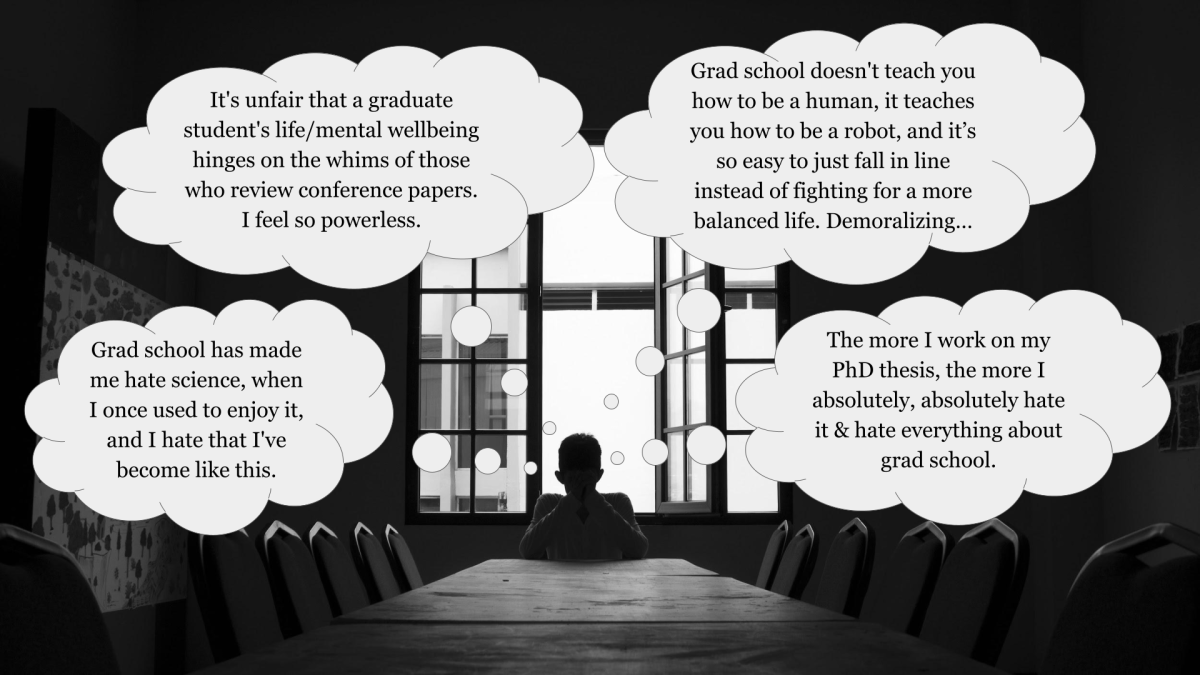

We all have dark thoughts sometimes. And if you’ve ever been a graduate student, perhaps thoughts like the following feel familiar:

The thoughts in this image are real data points collected during the deployment of a prototype called Flip*Doubt, an app in which users enter negative thoughts that are then sent to three randomly selected crowd workers to be “positively reframed” and returned to the user.

Rates of mental illness continue to rise every year. Yet there are nowhere near enough trained mental health professionals to meet the need, and COVID-19 has only made matters worse. In short, we urgently need to rethink how we design mental health interventions so that they are more scalable, accessible, affordable, effective, and safe.

So, how can technology create new ways to expand models of delivery for clinically validated therapeutic techniques? In Flip*Doubt, we focus on “cognitive reappraisal,” a well-researched technique for changing one’s thoughts about a situation in order to improve emotional wellbeing. This skill is often taught by trained therapists (e.g., in Cognitive Behavioral Therapy and Dialectical Behavior Therapy), and it has been shown to be highly effective at reducing symptoms of anxiety and depression. The problem is that it’s really hard to learn, and even harder to apply in one’s own mind on an ongoing basis.

We envision that people could learn the skill through practice by reframing thoughts for each other, since research shows that it’s easier to learn by objectively sizing up others’ thoughts than by immediately trying to challenge your own entrenched ways of thinking. Flip*Doubt therefore relies on crowd workers to create reframes. The major driving questions of our study were: What makes reframes good or bad? And how can we design systems that effectively help people nail the skill?

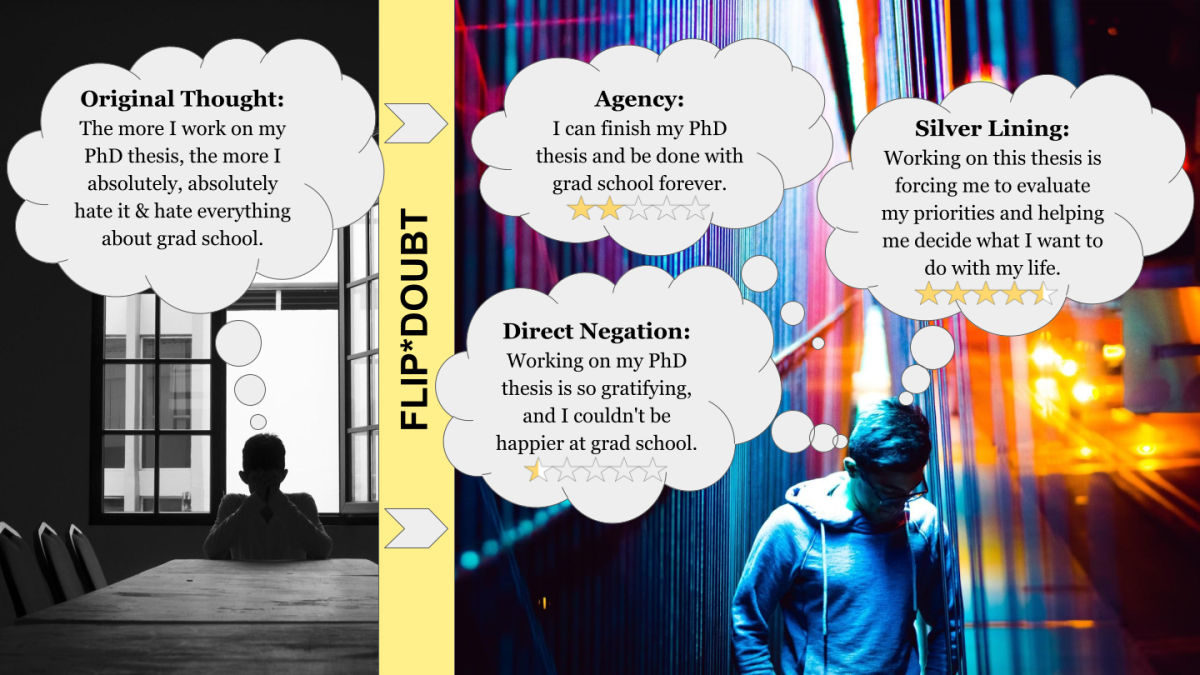

Our deployment yielded some fascinating results about how people use cognitive reappraisal systems in the wild, the types of negative thought patterns that weigh grad students down, and what types of strategies are most effective at flipping dark thoughts. For instance, the example below shows how a participant rated three reframes from Flip*Doubt:

Represented here are three reappraisal tactics, identified through our data analysis, for transforming the original thought. “Direct negation” isn’t effective at all: it’s invalidating and frustrating when someone simply suggests the opposite of what you’re struggling with. “Agency” rings truer, yet can feel a bit simplistic. “Silver lining” wins the gold for this thought: it provides fresh perspective by emphasizing an important positive that wouldn’t be possible without all the struggle. Our paper provides additional analyses, culminating in six hypotheses for what makes an effective reframe.

Our work suggests several important design implications. First, systems should consider prompting for structured reflection rather than for negative thoughts. People aren’t always thinking negatively, and accepting only negative thoughts as input can reinforce those thoughts or drive people away. Second, systems should consider tailoring user experiences to focus on a few core issues, since the biggest gains may come from making meaningful progress on vicious, repetitive thoughts rather than on any old negative thought. Finally, crowd-powered systems can be safer and more effective if we design AI/ML-based mechanisms that help peers shape their responses around effective reappraisal strategies and behaviors. There’s a lot more on this in the paper, so we hope you’ll read about it there.